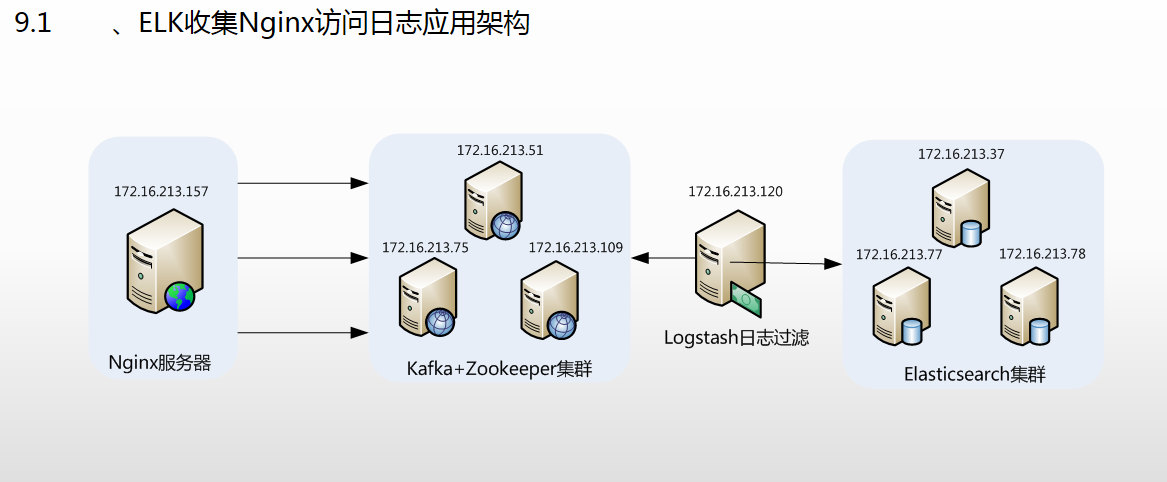

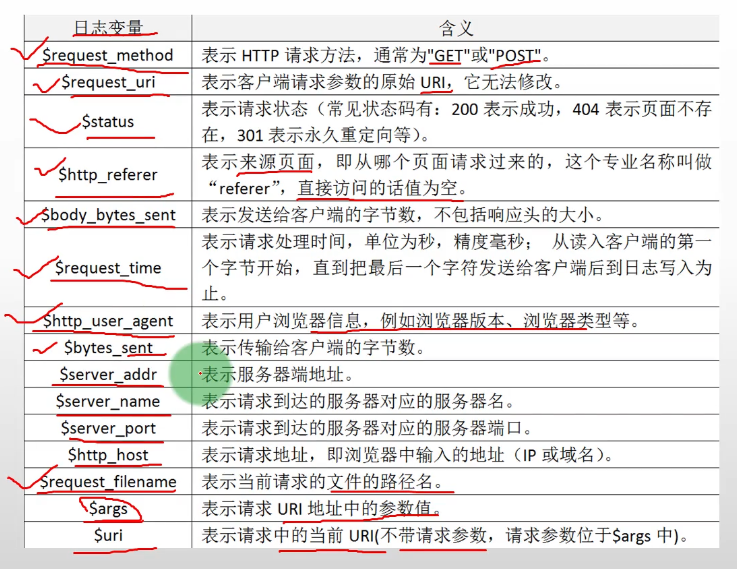

Nginx log format and log variables

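- Like Apache, Nginx supports custom log output formats. Before defining an Nginx log format, it helps to understand a few concepts about obtaining a user's real IP behind multiple layers of proxies.

- remote_addr: the client address, with one caveat. If no proxy is used, this is the client's real IP; if a proxy is used, it is the IP of the proxy that connects directly to the server (the last proxy in the chain).

- X-Forwarded-For (XFF): an HTTP extension header in the format X-Forwarded-For: client, proxy1, proxy2

- Suppose an HTTP request passes through three proxies, Proxy1, Proxy2, and Proxy3, with IPs IP1, IP2, and IP3, before reaching the server, and the user's real IP is IP0. By the XFF convention, the server then receives: X-Forwarded-For: IP0, IP1, IP2

- So IP3 never appears in X-Forwarded-For, while remote_addr holds exactly IP3.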
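
The three-proxy example above can be checked with a small simulation. This is a minimal sketch, not part of the original setup: the function name `forward_through_proxies` is hypothetical, and it assumes every proxy appends the address of its direct peer to X-Forwarded-For before forwarding.

```python
def forward_through_proxies(client_ip, proxy_ips):
    """Simulate how X-Forwarded-For accumulates along a proxy chain.

    Returns (x_forwarded_for, remote_addr) as seen by the final server.
    Assumption: each proxy appends the address of its direct peer.
    """
    xff_entries = []
    peer = client_ip  # whoever opened the current connection
    for proxy_ip in proxy_ips:
        # The proxy records its direct peer, then connects onward itself.
        xff_entries.append(peer)
        peer = proxy_ip
    return ", ".join(xff_entries), peer


xff, remote_addr = forward_through_proxies("IP0", ["IP1", "IP2", "IP3"])
print(xff)          # IP0, IP1, IP2
print(remote_addr)  # IP3
```

With an empty proxy list the header stays empty and `remote_addr` is the client itself, which matches the no-proxy case described for remote_addr.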

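1. $remote_addr: when the request comes through a proxy, this variable holds the IP of the proxy that connects to Nginx; without a proxy, it is the client's real IP address.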

Customizing the Nginx log format

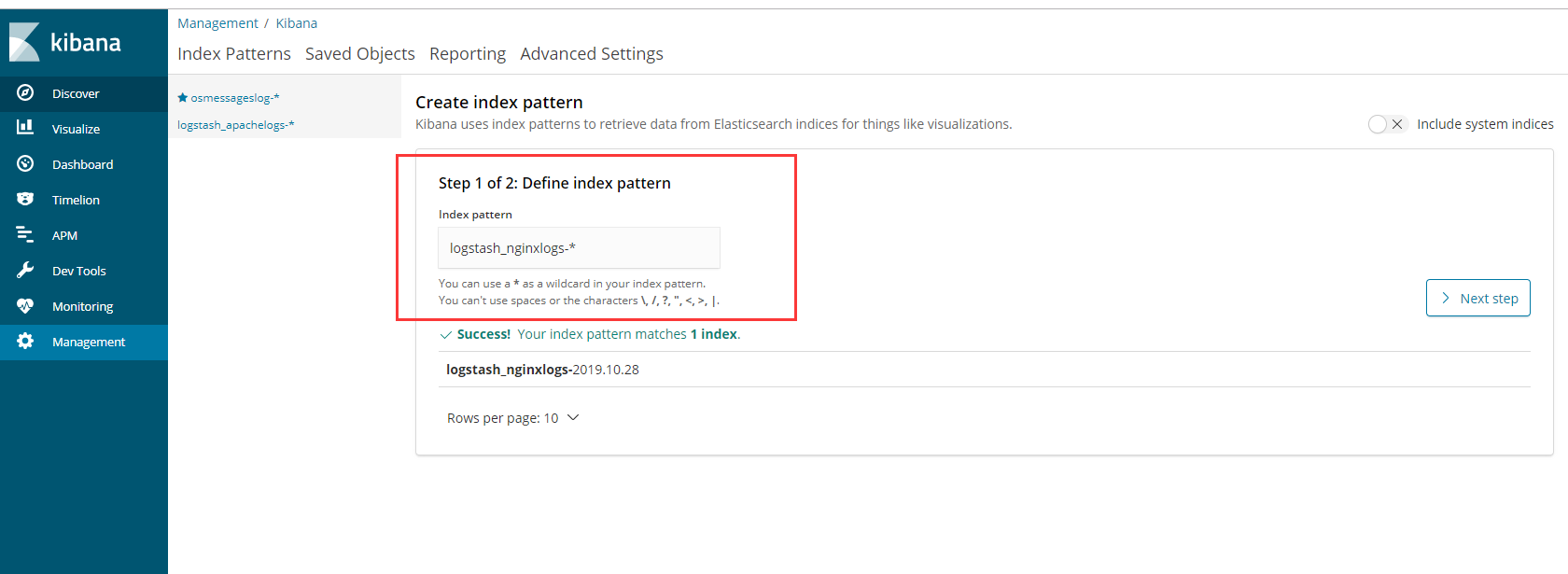

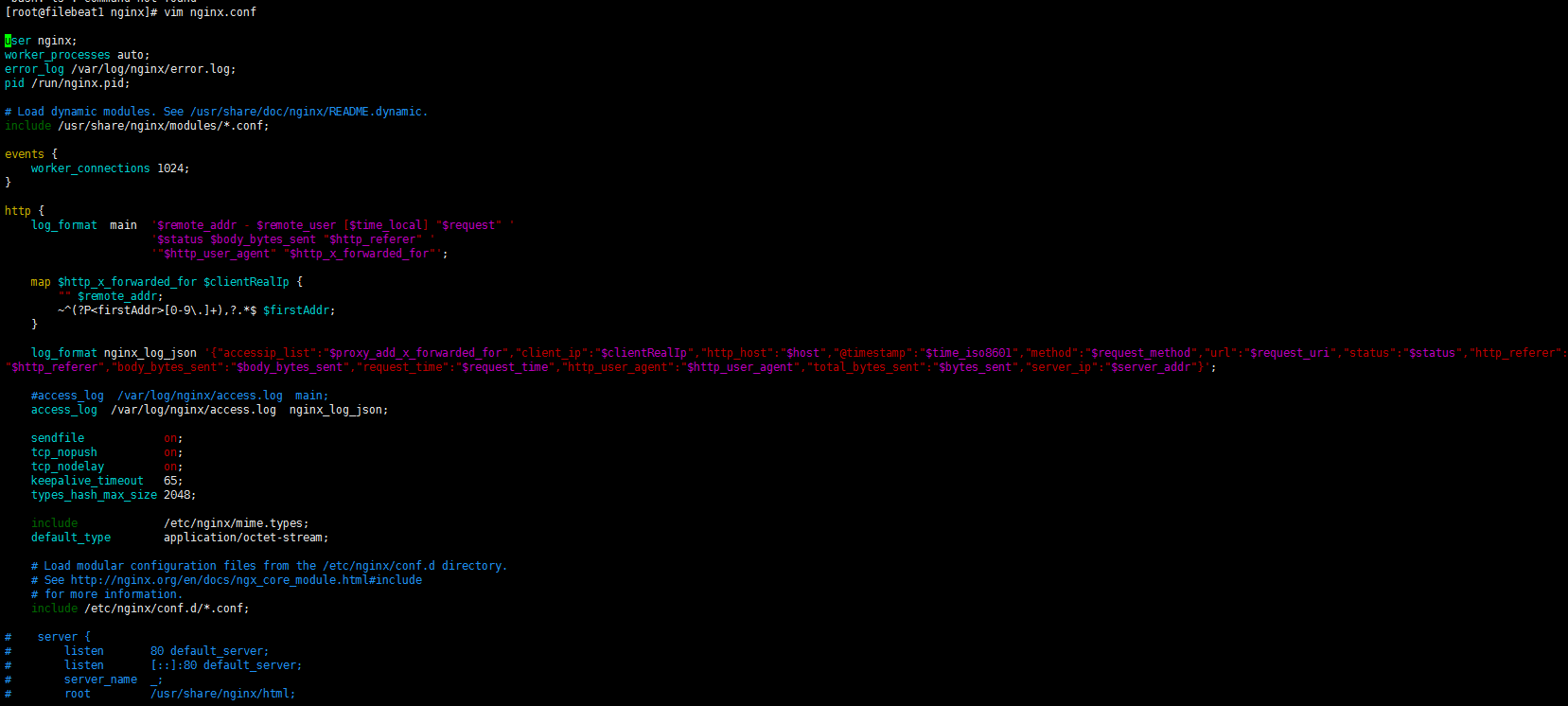

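- With the meaning of the Nginx log variables in hand, the next step is to reshape the log output. As before, the Nginx log output is set to JSON format.

- Only the log format and log file definitions from the Nginx configuration file nginx.conf are listed here. The defined log format and log file are as follows: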

`# When $http_x_forwarded_for == "", assign $remote_addr to $clientRealIp`
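
The fallback rule in that comment can be expressed as a short function. This is a minimal sketch in Python rather than Nginx configuration, written under assumptions: the function name `client_real_ip` is hypothetical, and taking the first X-Forwarded-For entry when the header is present is inferred from the XFF format described earlier, not taken from the original configuration.

```python
def client_real_ip(http_x_forwarded_for, remote_addr):
    """Pick the client IP the way the comment describes.

    Empty X-Forwarded-For: fall back to remote_addr.
    Otherwise (assumption): use the first entry of the header.
    """
    if not http_x_forwarded_for:
        return remote_addr
    # The first entry is the original client in "client, proxy1, proxy2".
    return http_x_forwarded_for.split(",")[0].strip()


print(client_real_ip("", "172.17.70.235"))
print(client_real_ip("IP0, IP1, IP2", "IP3"))
```

The first call returns the directly connected address because no proxy added a header; the second returns `IP0`, the leftmost entry.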

Two-layer proxy configuration

Local machine: 172.17.70.235

`[root@filebeat1 conf.d]# vim /etc/nginx/conf.d/test.conf`

`# Level-2 proxy: 172.17.70.230`

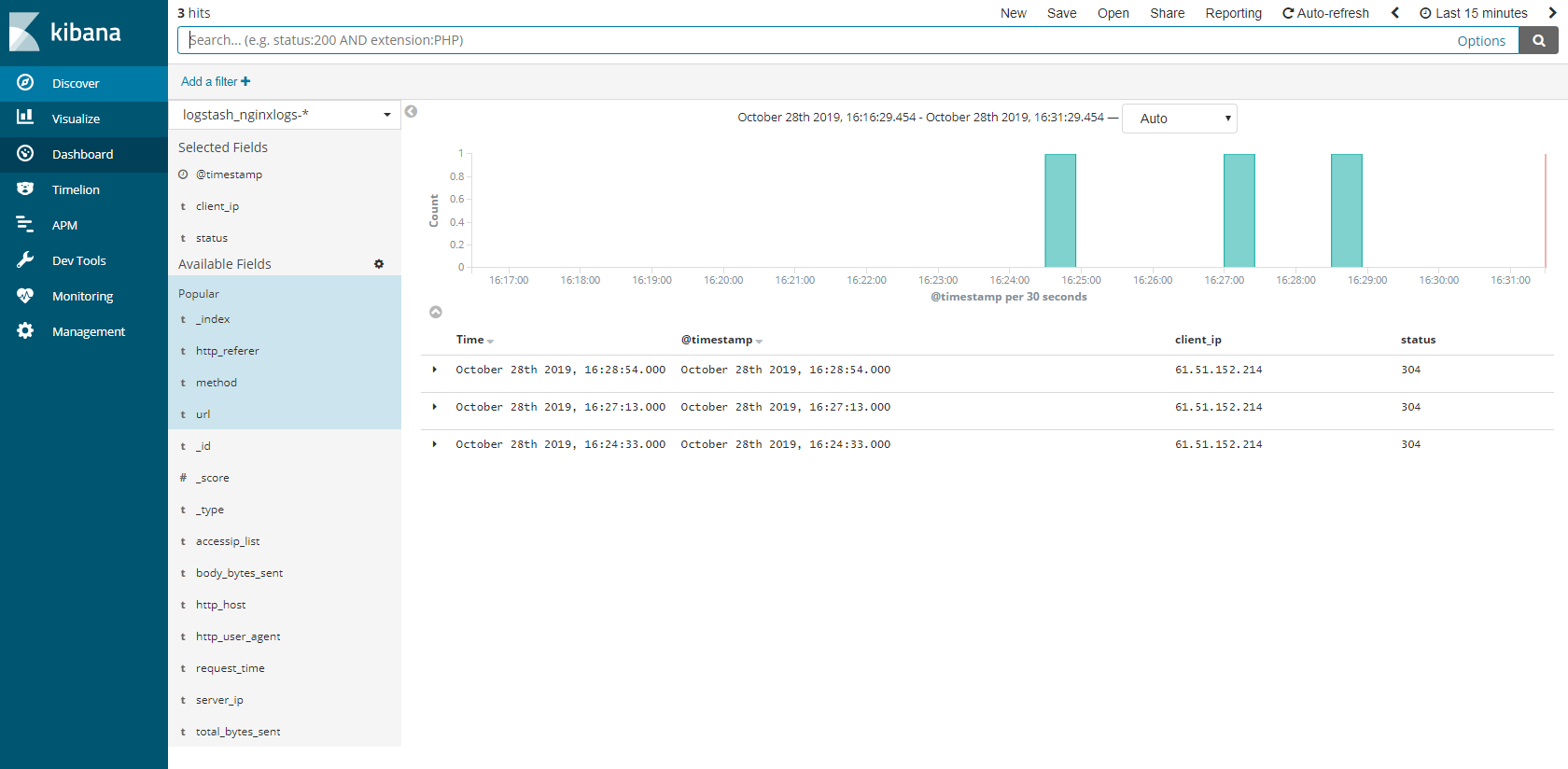

Inspecting the logs

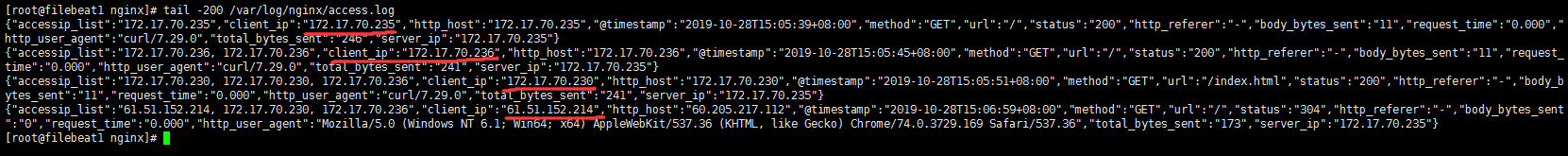

`[root@filebeat1 nginx]# tail -200 /var/log/nginx/access.log`

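- In this output, compare the client_ip and accessip_list fields: client_ip holds the real client IP address, while accessip_list holds the list of IPs accumulated across the proxies.

- The first entry comes from accessing http://172.17.70.235 directly, without any proxy.

- The second entry comes from a request that passed through one proxy layer, 172.17.70.236.

- The third entry comes from a request that passed through two proxy layers, via 172.17.70.230.

- The last entry comes from access over the local public network.

- Getting the real client IP in Nginx is simple and needs no special handling, which also saves a lot of work later when writing the logstash event configuration file.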
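
To compare the two fields programmatically, a JSON log line can be parsed as below. This is a minimal sketch under stated assumptions: only the field names client_ip and accessip_list come from the text above; the sample line, the client address 192.0.2.10, the comma-separated layout of accessip_list, and the function name `summarize` are all hypothetical.

```python
import json


def summarize(line):
    """Return (client_ip, list of hop IPs) from one JSON access-log line."""
    entry = json.loads(line)
    # Assumption: accessip_list is a comma-separated string of IPs.
    hops = [ip.strip() for ip in entry["accessip_list"].split(",") if ip.strip()]
    return entry["client_ip"], hops


# Hypothetical log line for a request that passed through one proxy.
sample = '{"accessip_list": "192.0.2.10, 172.17.70.236", "client_ip": "192.0.2.10"}'
client, hops = summarize(sample)
print(client)
print(hops)
```

In this made-up line, client_ip equals the first hop in accessip_list, which is the relationship the log entries above illustrate.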

Configuring filebeat

- filebeat is installed on the Nginx server.

`# Adapt the earlier Apache configuration`

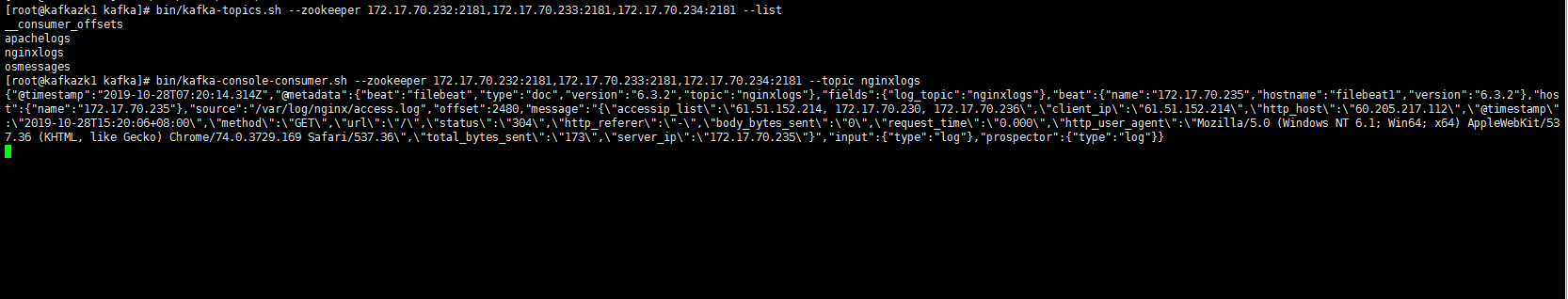

Testing reception in Kafka

`# Start filebeat`

Configuring logstash

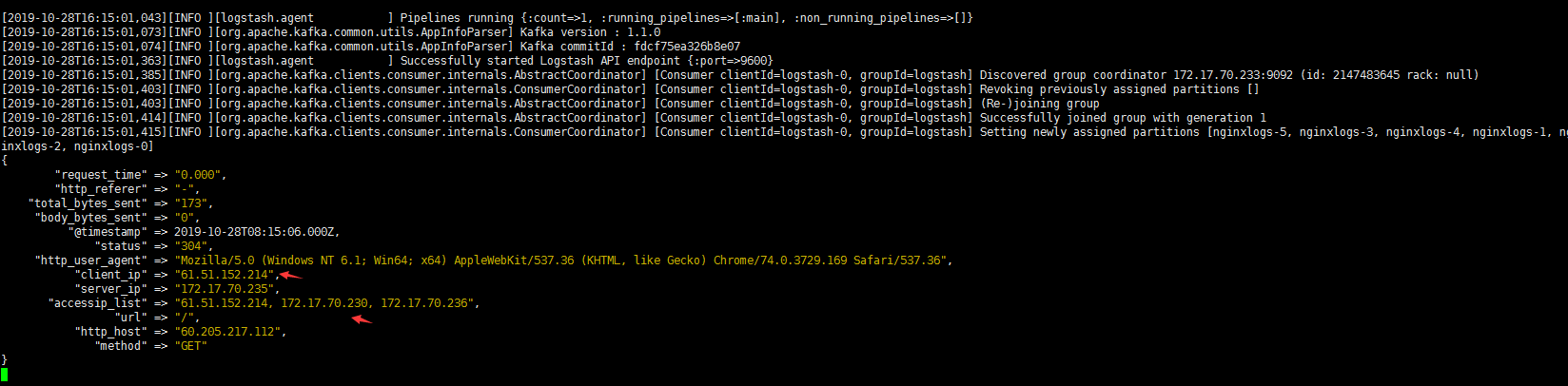

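- Because the log format is already defined in the Nginx output, logstash does not need to filter or parse the logs.

- The content of the logstash event configuration file kafka_nginx_into_es.conf is given directly below:

- With Apache, extracting the real client IP has to be defined by hand; Nginx handles it with map.

`[root@logstash logstash]# vim kafka_nginx_into_es.conf`

`[root@logstash logstash]# bin/logstash -f kafka_nginx_into_es.conf`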

Output to the ES cluster

`# Used for the conditional; corresponds to [@metadata][myid] in the input above. When there are multiple input sources,`

`# Run in the background`