Production Kubernetes Platform Planning

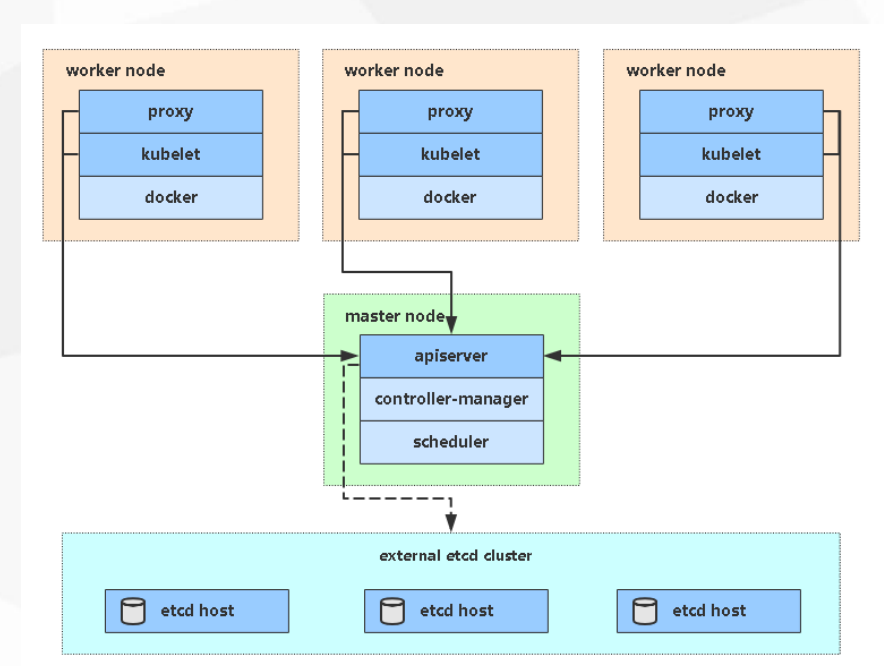

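Single-master cluster

- With only one master there is a single point of failure. If the master node fails, you can no longer create or manage applications, although applications that are already deployed keep running.

- etcd can be deployed separately (as its own cluster). All that matters is that Kubernetes can reach etcd.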

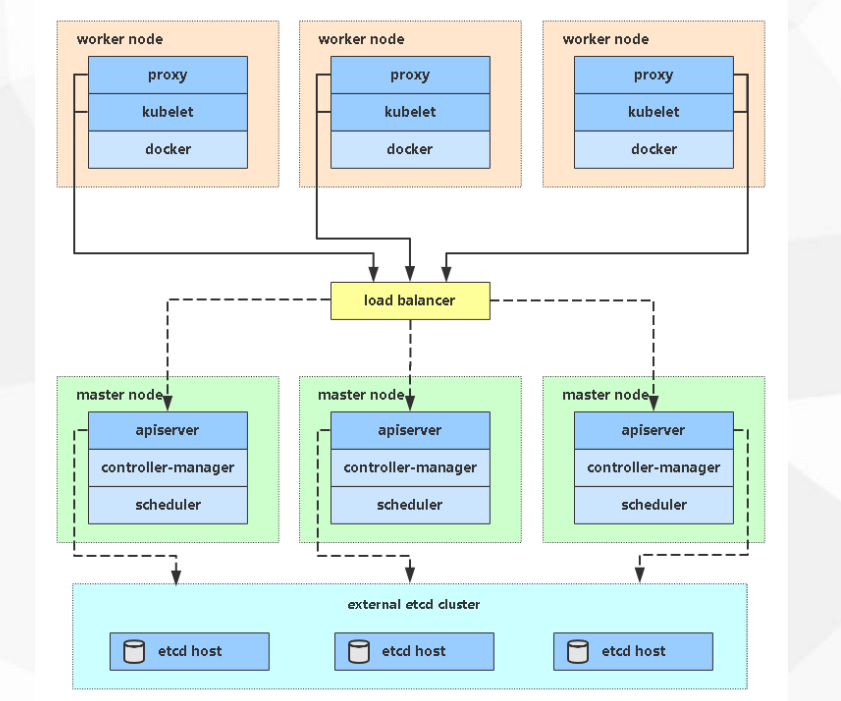

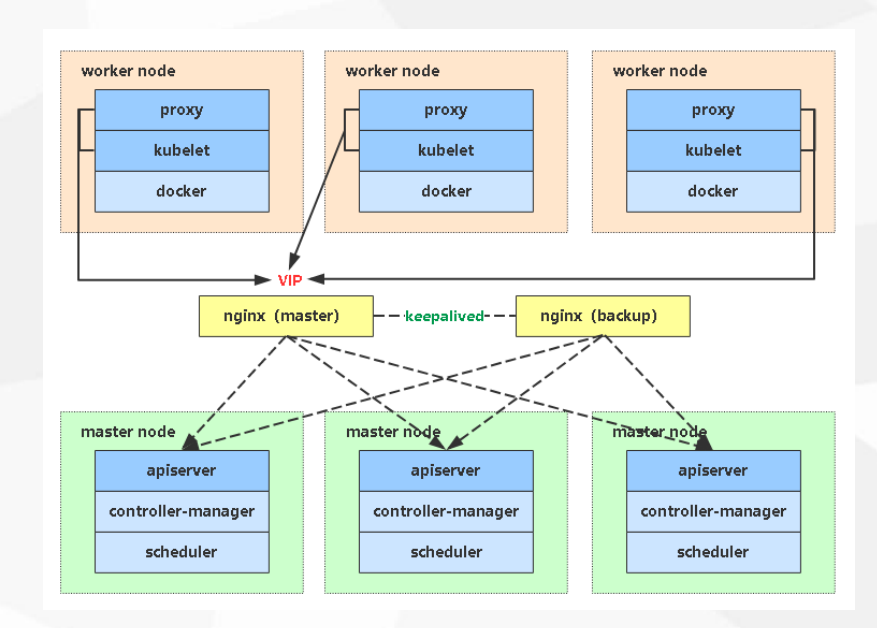

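Multi-master cluster (HA)

- Multiple masters work together behind a load balancer.

- The key component is the apiserver: nodes are changed to connect to the load balancer (LB) instead of a single apiserver.

- No request goes straight to a particular apiserver. Every request reaches the LB first and is then distributed.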

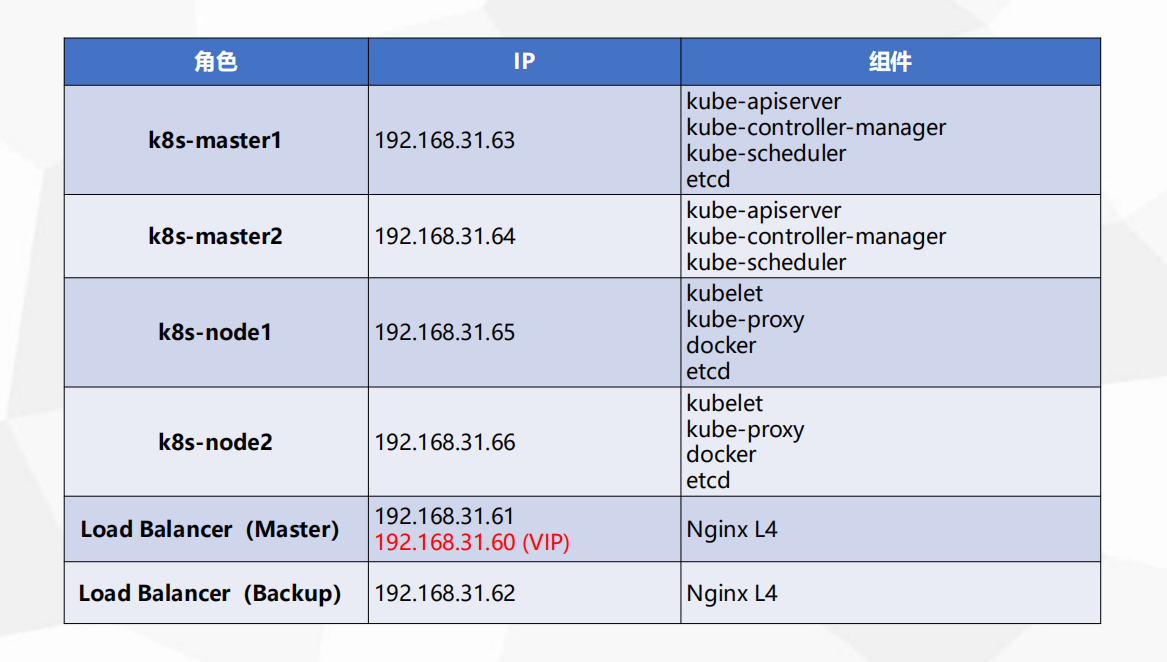

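- Test environment: a single-master cluster

- One master and two nodes

- The etcd cluster is spread across these 3 machines

Production environment: multiple masters are mandatory, because no single point of failure is acceptable in production.

- Prepare machines according to the production plan below, and scale nodes out horizontally

- If etcd is co-located on the Kubernetes machines, at least 6 machines are needed

- With more machines available, deploy etcd on dedicated hosts as well

- Nodes need high-spec hardware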

Production Kubernetes platform planning

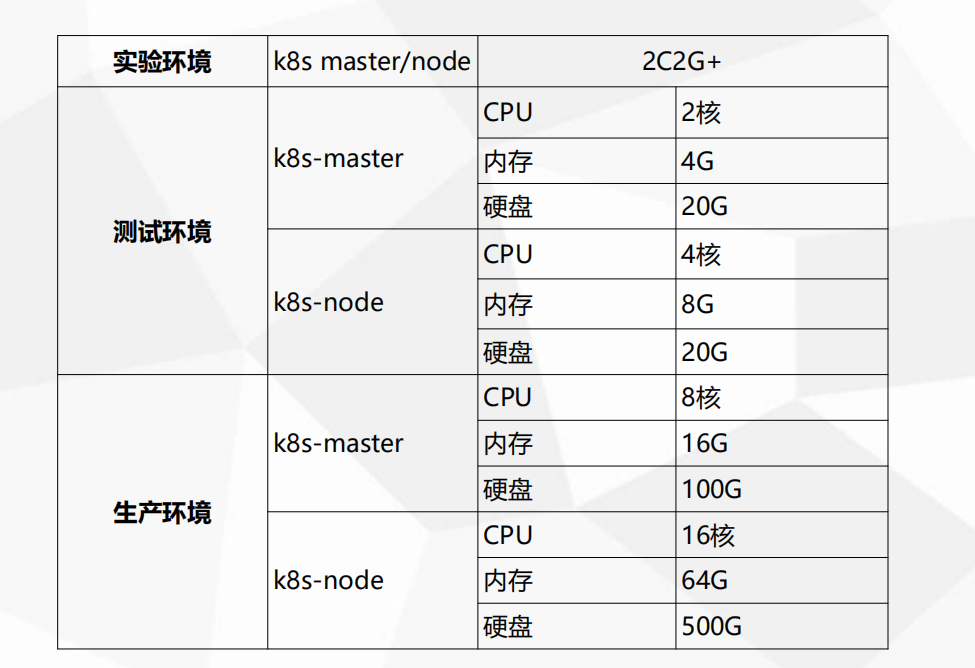

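Recommended server hardware

- If the nodes run short of capacity, scale out horizontally

- Masters are rarely scaled; 2 to 3 are enough

- Keep at least 30% of the resources in reserve on every server in the cluster, and plan to scale out once usage reaches 80%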

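The three official deployment methods

- minikube

  - Minikube is a tool that quickly runs a single-node Kubernetes locally. It is intended only for trying out Kubernetes or for day-to-day development.

  - Docs: https://kubernetes.io/docs/setup/minikube/

- kubeadm

  - Kubeadm is also a tool. It provides kubeadm init and kubeadm join for quickly deploying a Kubernetes cluster.

  - Docs: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

- Binaries

  - Recommended: download the release binaries from the official site and deploy each component by hand to form a Kubernetes cluster.

  - Download: https://github.com/kubernetes/kubernetes/releases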

Single-master cluster setup

System initialization

```bash
# Disable the firewall:
```
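The following is a minimal sketch of node initialization for this step. It assumes CentOS 7 with firewalld and SELinux. Only the firewall step comes from the heading above; disabling SELinux and swap are common extra steps for a binary install and are assumptions here. The `run` wrapper and the `DRY_RUN` switch are hypothetical. By default the script only prints the commands, and `DRY_RUN=0` executes them (as root).

```bash
#!/usr/bin/env bash
# Sketch of node initialization, assuming CentOS 7 (firewalld + SELinux).
# DRY_RUN is a hypothetical switch: 1 (default) only prints the commands.

run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

init_system() {
  # Disable the firewall now and at boot
  run systemctl stop firewalld
  run systemctl disable firewalld
  # Disable SELinux now and after reboot (assumption: commonly required)
  run setenforce 0
  run sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
  # Turn off swap; kubelet refuses to start with swap on by default
  run swapoff -a
  run sed -ri 's/.*swap.*/#&/' /etc/fstab
}

init_system
```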

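etcd cluster

Understanding SSL certificates

- All communication in the cluster is over HTTPS

- In this architecture, the etcd members talk to each other over HTTPS, and the Kubernetes master (apiserver) needs HTTPS as well, so two sets of certificates are required

- Certificates are either self-signed or issued by a certificate authority (e.g. Symantec). A CA-issued certificate for a domain such as www.ctnrs.com costs around 3000 CNY, and a wildcard certificate for *.ctnrs.com is more expensive

- Root certificate (CA)

- Self-signed certificates are not trusted by browsers, but they still provide encryption. They are fine for calls between internal services that are not exposed externally

- A certificate consists of a crt (the digital certificate) and a key (the private key), and both are configured on the web server

- With a CA-issued certificate, browsers show the HTTPS site as green (secure). With a self-signed one, they show an exclamation mark (not trusted)

- It is uncertain whether a CA-issued certificate would work inside Kubernetes, because the certificate contains the list of trusted IPs, which may cause problems

- HTTPS -> CA -> crt|key: the CA issues the certificate (crt), which HTTPS uses together with its private key (key)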

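Generating etcd certificates

- The following can be done on any node.

Self-signing certificates with cfssl

- cfssl, like openssl, can generate certificates

- cfssl generates certificates from JSON files, which is more intuitive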

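```bash
[root@k8s-master opt]# ls
```

```bash
[root@k8s-master opt]# cd TLS
```

```bash
# Move the cfssl binaries into an executable directory
```

```bash
[root@k8s-master etcd]# cd /opt/TLS/etcd/
```

```bash
# Create a self-built CA
```

```bash
# Which domains/IPs the certificate is issued for
```

```bash
# Request a certificate from the CA
```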
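A minimal sketch of the cfssl inputs for these steps, assuming the common layout. There is a CA config with one signing profile; the name `www` is an arbitrary choice that only has to match `-profile`. There is also a server CSR whose `hosts` must list every etcd member IP. The helper functions and the IPs 192.168.1.11 to .13 are placeholders. `ca-csr.json` (not shown) has the same shape as the server CSR without `hosts`.

```bash
#!/usr/bin/env bash
# Hypothetical helpers that print cfssl input files for the etcd certificates.

# CA signing policy: one profile ("www", arbitrary name) valid for 10 years,
# usable for both server and client authentication.
ca_config() {
  cat <<'EOF'
{
  "signing": {
    "default": { "expiry": "87600h" },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}
EOF
}

# CSR for the etcd server certificate; every argument becomes a "hosts" entry.
# The certificate is only valid for the IPs/names listed here.
server_csr() {
  local hosts="" h
  for h in "$@"; do
    hosts+="${hosts:+,}\"${h}\""
  done
  cat <<EOF
{
  "CN": "etcd",
  "hosts": [${hosts}],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [{ "C": "CN", "ST": "BeiJing", "L": "BeiJing" }]
}
EOF
}

server_csr 192.168.1.11 192.168.1.12 192.168.1.13

# Usage in /opt/TLS/etcd (IPs are placeholders for the three etcd members):
#   ca_config > ca-config.json
#   server_csr 192.168.1.11 192.168.1.12 192.168.1.13 > server-csr.json
#   cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
#   cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
#     -profile=www server-csr.json | cfssljson -bare server
```

After the last command, `server.pem` and `server-key.pem` are the certificate and private key that etcd will use.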

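etcd overview

- Open-sourced by CoreOS

- etcd is first of all a key-value store, used for shared configuration and service discovery.

- etcd stores the Kubernetes cluster's configuration and the state of every resource. When data changes, etcd promptly notifies the relevant Kubernetes components.

- An odd number of members is officially recommended. Common sizes are 3, 5 and 7, which tolerate 1, 2 and 3 failed members respectively.

- In a 3-member cluster, one member is first elected leader. It handles writes and replicates them to the other 2 members (followers).

- When the leader fails, the two remaining followers elect a new leader.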
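The 3/5/7 figures follow from majority quorum. A cluster of n members needs floor(n/2)+1 votes, so it tolerates n minus that many failures. The small shell sketch below makes the arithmetic explicit; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Majority-quorum arithmetic for an etcd cluster of n members.
quorum()    { echo $(( $1 / 2 + 1 )); }
tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5 6 7; do
  echo "members=$n quorum=$(quorum "$n") tolerates=$(tolerance "$n")"
done
```

Four members need 3 votes and still tolerate only 1 failure, the same as three, which is why odd sizes are recommended.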

```bash
# Introduction docs
```

```bash
# Advantages
```

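Deploying three etcd nodes

```bash
# Environment
```

```bash
# Binary package download URL
```

```bash
# Check the working directory
```

```bash
# Check the config file
```

```bash
# Put the certificates into the ssl directory
```

```bash
# Deploy to all nodes
```

```bash
# Then edit each node's config file, mainly the IPs and ETCD_NAME
```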
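A sketch of a per-member `/opt/etcd/cfg/etcd.conf`. It assumes the common layout where the systemd unit loads this file as an `EnvironmentFile` and the certificate flags are passed on the command line. The `ETCD_*` variables are etcd's environment-variable forms of its flags. The helper, member names, data directory and IPs are hypothetical. Only `ETCD_NAME` and the IP-based URLs differ between members.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print etcd.conf for one member.
#   $1 = member name, $2 = this member's IP, $3 = initial cluster list
etcd_conf() {
  local name="$1" ip="$2" cluster="$3"
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

CLUSTER="etcd-1=https://192.168.1.11:2380,etcd-2=https://192.168.1.12:2380,etcd-3=https://192.168.1.13:2380"
# On the second member, for example:
etcd_conf etcd-2 192.168.1.12 "$CLUSTER"
```

Every member has the same `ETCD_INITIAL_CLUSTER` value. The names in it must match each member's `ETCD_NAME`.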

启动etcd

1 | # 三台节点都需要操作 |

1 | # etcd 日志 |

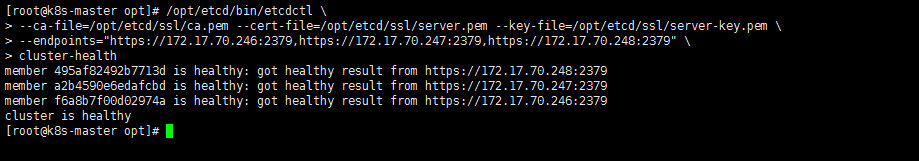

1 | # 查看集群状态 需要指定3个证书哦 |
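With the v3 API, `etcdctl` takes the CA, the client certificate and the key through `--cacert`, `--cert` and `--key`. These are the three certificates meant here. The sketch below assumes the certificates are in `/opt/etcd/ssl/` and uses placeholder IPs. The `etcd_endpoints` helper is hypothetical and only builds the `--endpoints` list.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn member IPs into a comma-separated client URL list.
etcd_endpoints() {
  local out="" ip
  for ip in "$@"; do
    out+="${out:+,}https://${ip}:2379"
  done
  echo "$out"
}

ENDPOINTS="$(etcd_endpoints 192.168.1.11 192.168.1.12 192.168.1.13)"
echo "$ENDPOINTS"

# On a node with etcdctl installed (paths are assumptions):
#   ETCDCTL_API=3 /opt/etcd/bin/etcdctl \
#     --cacert=/opt/etcd/ssl/ca.pem \
#     --cert=/opt/etcd/ssl/server.pem \
#     --key=/opt/etcd/ssl/server-key.pem \
#     --endpoints="$ENDPOINTS" endpoint health
```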

```bash
# Port check
```

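Deploying the master node

Generating the apiserver certificate

```bash
# Check the directory
```

```bash
[root@k8s-master k8s]# vim server-csr.json
```

```bash
# Run the script to generate the certificates
```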
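The apiserver certificate's `hosts` list must cover every address clients use to reach the apiserver. That means the master IPs, the load balancer and VIP addresses, `127.0.0.1`, the in-cluster `kubernetes` Service names, and the first IP of `--service-cluster-ip-range` (the ClusterIP of the `kubernetes` Service). The sketch below computes that first IP and prints the hosts entries. It assumes a `10.0.0.0/24` service range, the default `cluster.local` domain and placeholder IPs; both helper names are hypothetical.

```bash
#!/usr/bin/env bash
# First address of a service CIDR, e.g. 10.0.0.0/24 -> 10.0.0.1.
first_service_ip() {
  local cidr="$1" a b c d n mask
  local prefix="${cidr#*/}"
  IFS=. read -r a b c d <<< "${cidr%/*}"
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Print the "hosts" entries for server-csr.json, one per line.
#   $1 = service CIDR, remaining args = master, LB and VIP addresses
apiserver_hosts() {
  local service_cidr="$1"; shift
  printf '%s\n' "$(first_service_ip "$service_cidr")" 127.0.0.1 "$@" \
    kubernetes kubernetes.default kubernetes.default.svc \
    kubernetes.default.svc.cluster kubernetes.default.svc.cluster.local
}

# Masters, LB nodes and the VIP (placeholders):
apiserver_hosts 10.0.0.0/24 192.168.1.11 192.168.1.12 192.168.1.100
```

Listing the LB and VIP addresses now avoids reissuing the certificate when moving to a multi-master setup later.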

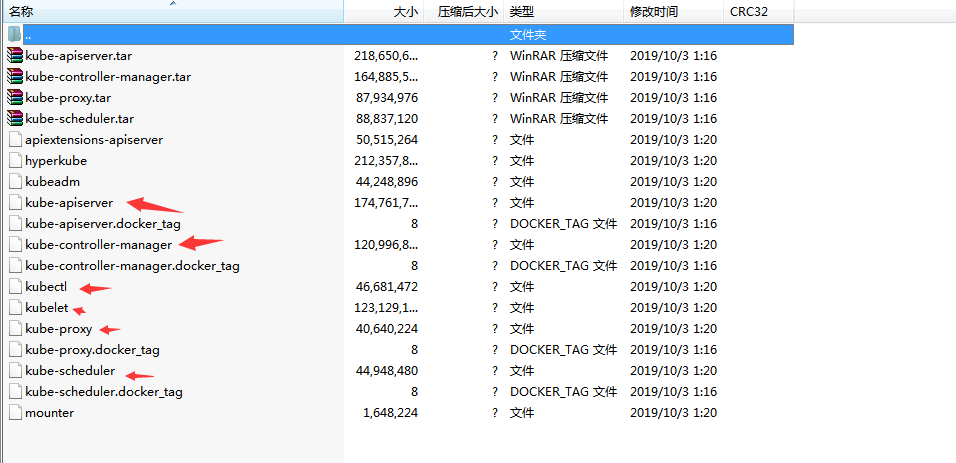

部署 apiserve controller-manager和scheduler

- 在Master节点完成以下操作

- 配置文件 -> systemd管理组件 -> 启动

- 二进制包下载地址

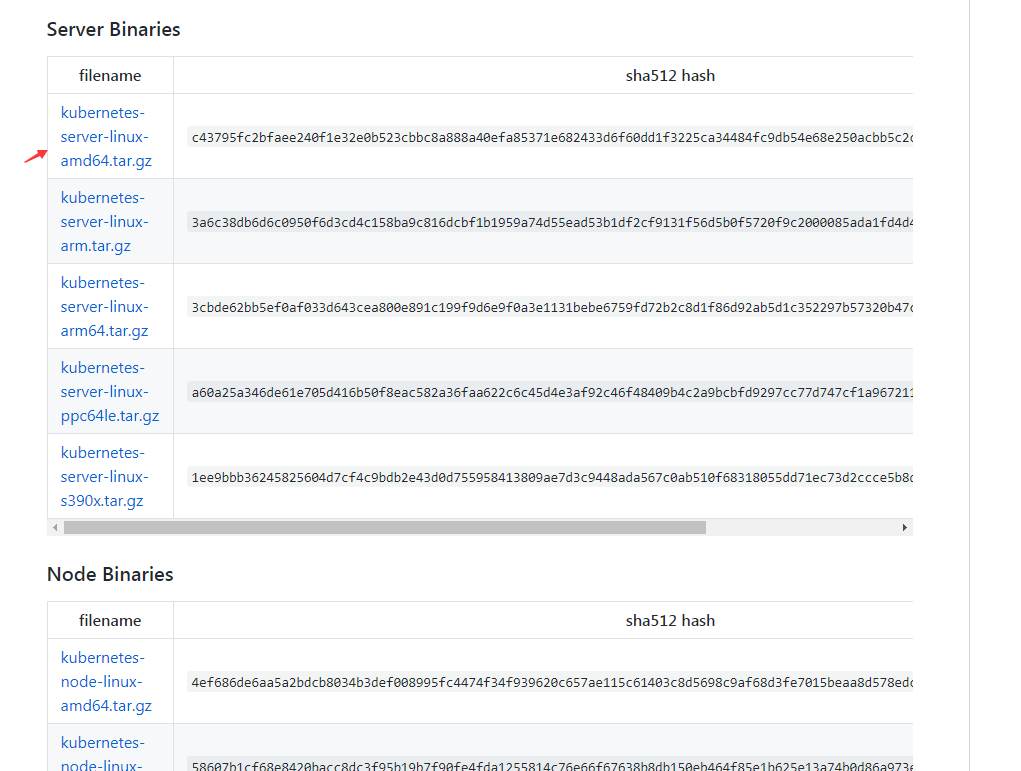

1 | # 下载Server Binaries node的也在里面 |

1 | # 将需要使用的包 替换 如master节点 |

解压 k8s-master.tar.gz

1 | [root@k8s-master opt]# ls -l /opt/k8s-master.tar.gz |

1 | # 服务启动文件 注意服务配置的目录 |
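A minimal sketch of a control-plane unit file. It assumes the `/opt/kubernetes/{bin,cfg}` layout and that each component's `.conf` defines a single `KUBE_<COMPONENT>_OPTS` variable. The helper that derives the variable name from the component name is hypothetical and needs bash 4 for `${var^^}`. The same shape works for kube-controller-manager and kube-scheduler.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a systemd unit for a control-plane component.
#   $1 = component name, e.g. kube-apiserver
unit_file() {
  local comp="$1" var
  var="${comp#kube-}"      # apiserver / controller-manager / scheduler
  var="KUBE_${var//-/_}"   # dashes become underscores
  var="${var^^}_OPTS"      # e.g. KUBE_APISERVER_OPTS
  cat <<EOF
[Unit]
Description=Kubernetes ${comp}
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/${comp}.conf
ExecStart=/opt/kubernetes/bin/${comp} \$${var}
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

unit_file kube-apiserver
# Install: unit_file kube-apiserver > /usr/lib/systemd/system/kube-apiserver.service
#          systemctl daemon-reload && systemctl enable --now kube-apiserver
```

The unbraced `$KUBE_..._OPTS` in `ExecStart` lets systemd split the variable into separate arguments.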

```bash
[root@k8s-master opt]# tree kubernetes/
```

Configuring certificates

```bash
# Copy the certificates
```

Configuration files

```bash
# Official docs
```

kube-apiserver.conf

```bash
[root@k8s-master cfg]# vim kube-apiserver.conf
```
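A sketch of this file, assuming a v1.18-era release. Later releases removed `--logtostderr` and `--log-dir` and also require `--service-account-issuer` and `--service-account-signing-key-file`. The sketch also assumes certificates in `/opt/kubernetes/ssl` and `/opt/etcd/ssl`, a `10.0.0.0/24` service range, and a systemd unit that loads this file as an `EnvironmentFile` and passes `$KUBE_APISERVER_OPTS` to the binary. The helper and IPs are placeholders. `--enable-bootstrap-token-auth` and `--token-auth-file` are what TLS Bootstrapping relies on later.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print /opt/kubernetes/cfg/kube-apiserver.conf.
#   $1 = this master's IP, $2 = comma-separated etcd client URLs
apiserver_conf() {
  local ip="$1" etcd="$2"
  cat <<EOF
KUBE_APISERVER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--etcd-servers=${etcd} \\
--bind-address=${ip} \\
--secure-port=6443 \\
--advertise-address=${ip} \\
--allow-privileged=true \\
--service-cluster-ip-range=10.0.0.0/24 \\
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--enable-bootstrap-token-auth=true \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=30000-32767 \\
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\
--tls-cert-file=/opt/kubernetes/ssl/server.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--etcd-cafile=/opt/etcd/ssl/ca.pem \\
--etcd-certfile=/opt/etcd/ssl/server.pem \\
--etcd-keyfile=/opt/etcd/ssl/server-key.pem"
EOF
}

apiserver_conf 192.168.1.11 "https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379"
```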

kube-controller-manager.conf

```bash
# Official docs
```

```bash
[root@k8s-master cfg]# vim kube-controller-manager.conf
```

kube-scheduler.conf

```bash
[root@k8s-master cfg]# vim kube-scheduler.conf
```

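Starting the services

```bash
# Reload systemd and start the services
```

```bash
systemctl start kube-apiserver
```

```bash
[root@k8s-master opt]# systemctl start kube-apiserver
```

```bash
# Put kubectl in /usr/bin for convenience
```

```bash
# Check the cluster status; it turns healthy after a short while, and seeing the components listed is enough
```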

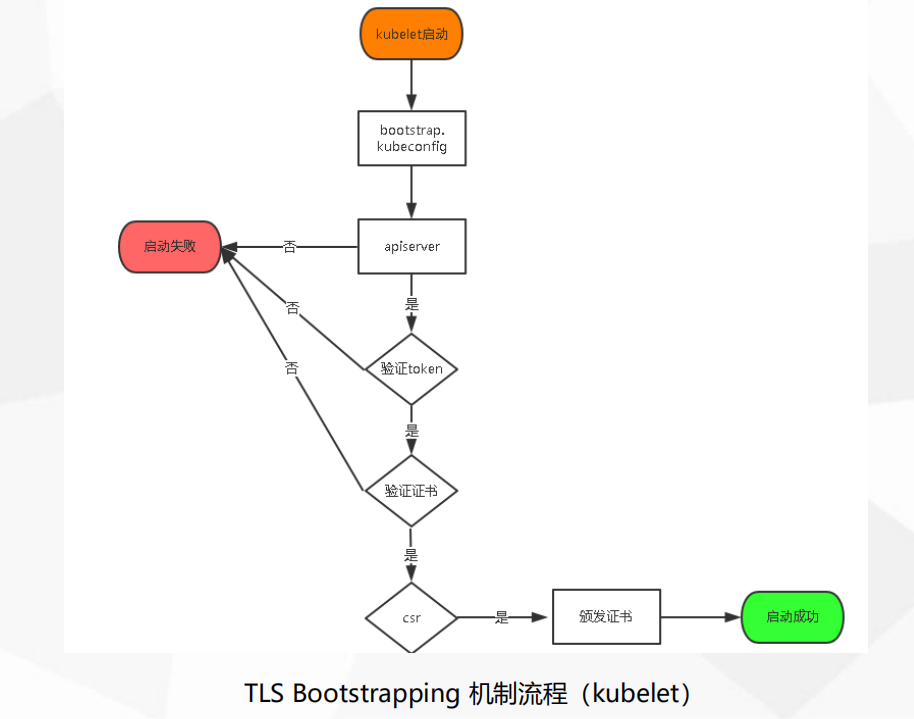

启用 TLS Bootstrapping

```bash
[root@k8s-master cfg]# cat kube-apiserver.conf | grep bootstrap
```
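For static-token bootstrapping, `--token-auth-file` points at a CSV with lines of the form `token,user,uid,"groups"`. The kubelet presents the same token from its `bootstrap.kubeconfig`. The sketch below generates a random 32-hex-character token and prints the line. It assumes the common `kubelet-bootstrap` user, an arbitrary uid of 10001 and the `system:node-bootstrapper` group.

```bash
#!/usr/bin/env bash
# Generate a random 16-byte token as 32 lowercase hex characters.
new_token() {
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# Print one token.csv line: token,user,uid,"group"
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$1"
}

TOKEN="$(new_token)"
token_csv_line "$TOKEN"
# Save:  token_csv_line "$TOKEN" > /opt/kubernetes/cfg/token.csv
# Allow the bootstrap user to submit CSRs:
#   kubectl create clusterrolebinding kubelet-bootstrap \
#     --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap
```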

Deploying the worker nodes

Deploying Docker

```
Docker binary download URL: https://download.docker.com/linux/static/stable/x86_64/
```

```bash
[root@k8s-master opt]# scp k8s-node.tar.gz root@172.17.70.247:/opt
```

```bash
# Install Docker from the binaries
```

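Configuring kubelet and kube-proxy

```bash
[root@k8s-node1 opt]# tree kubernetes/
```

```bash
[root@k8s-node1 cfg]# cd /opt/kubernetes/cfg
```

```bash
# Bootstrapping is how Kubernetes avoids issuing kubelet certificates by hand for many nodes: it automatically issues certificates to nodes that join the cluster
```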
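The kubelet starts with a `bootstrap.kubeconfig` that carries only the bootstrap token. Once its CSR is approved, the kubelet obtains a client certificate and writes its own kubeconfig. The sketch below prints that bootstrap file. It assumes the CA is at `/opt/kubernetes/ssl/ca.pem`, the apiserver is `https://192.168.1.11:6443` (placeholder), and the token matches the apiserver's token file. Generating it with `kubectl config set-cluster`/`set-credentials`/`set-context` is the usual alternative.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print bootstrap.kubeconfig.
#   $1 = apiserver URL, $2 = bootstrap token (same as in token.csv)
bootstrap_kubeconfig() {
  local server="$1" token="$2"
  cat <<EOF
apiVersion: v1
kind: Config
clusters:
- name: kubernetes
  cluster:
    certificate-authority: /opt/kubernetes/ssl/ca.pem
    server: ${server}
users:
- name: kubelet-bootstrap
  user:
    token: ${token}
contexts:
- name: default
  context:
    cluster: kubernetes
    user: kubelet-bootstrap
current-context: default
EOF
}

bootstrap_kubeconfig https://192.168.1.11:6443 0123456789abcdef0123456789abcdef
```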

```bash
[root@k8s-node1 cfg]# vim kube-proxy.conf
```

```bash
# Connect to the apiserver
```

```bash
[root@k8s-node1 cfg]# vim kube-proxy-config.yml
```
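The sketch below prints `kube-proxy-config.yml` using the `KubeProxyConfiguration` API. It assumes the kubeconfig path used in this guide and a pod network of `10.244.0.0/16` (flannel's default). `hostnameOverride` must match the node name. All values here are placeholders.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print kube-proxy-config.yml for one node.
#   $1 = node name (must match the kubelet's node name)
kube_proxy_config() {
  cat <<EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: $1
clusterCIDR: 10.244.0.0/16
EOF
}

kube_proxy_config k8s-node1
```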

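Configuring the unit files

```bash
[root@k8s-node1 opt]# mv *.service /usr/lib/systemd/system
```

Copying certificates

```bash
[root@k8s-master k8s]# cd /opt/TLS/k8s/
```

Starting the services

```bash
[root@k8s-node1 cfg]# systemctl start kubelet
```

```bash
# The node's certificate request must be approved before a certificate is issued
```

Approving certificate issuance for nodes

```bash
# View the requests
```

```bash
# Don't forget to deploy the second node (node2) the same way
```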
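Pending node CSRs show `Pending` in the last (CONDITION) column of `kubectl get csr`. Each one is approved with `kubectl certificate approve <name>`. The sketch below extracts the pending names so they can be approved in one go. The function name is hypothetical. Approving in bulk trusts every requester, so check the list before piping it to `approve`.

```bash
#!/usr/bin/env bash
# Read `kubectl get csr` output on stdin, print names whose last column is Pending.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage on the master:
#   kubectl get csr | pending_csrs
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```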

TLS Bootstrapping flow (kubelet)

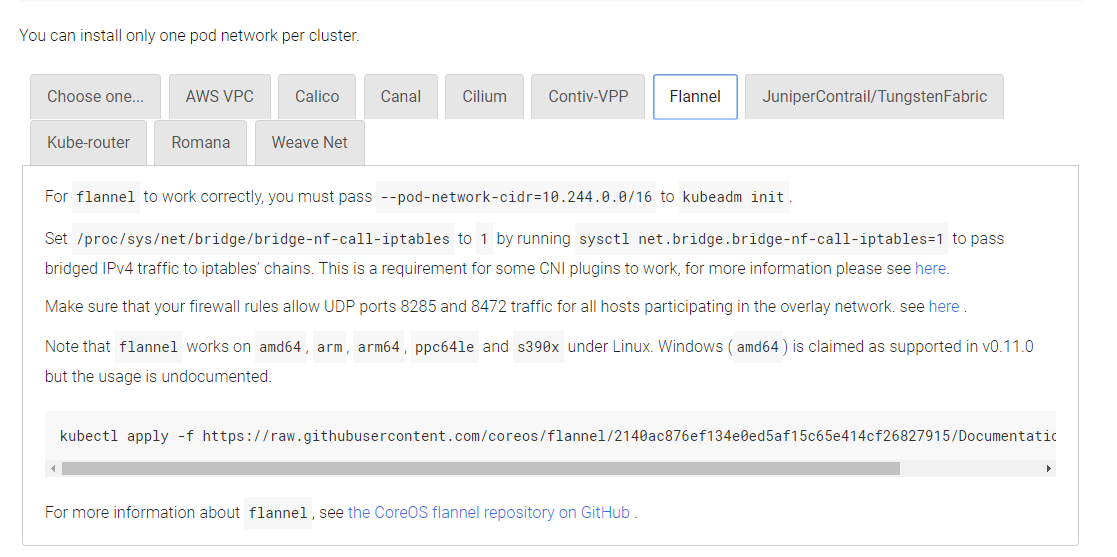

部署 CNI 网络 (Flannel)

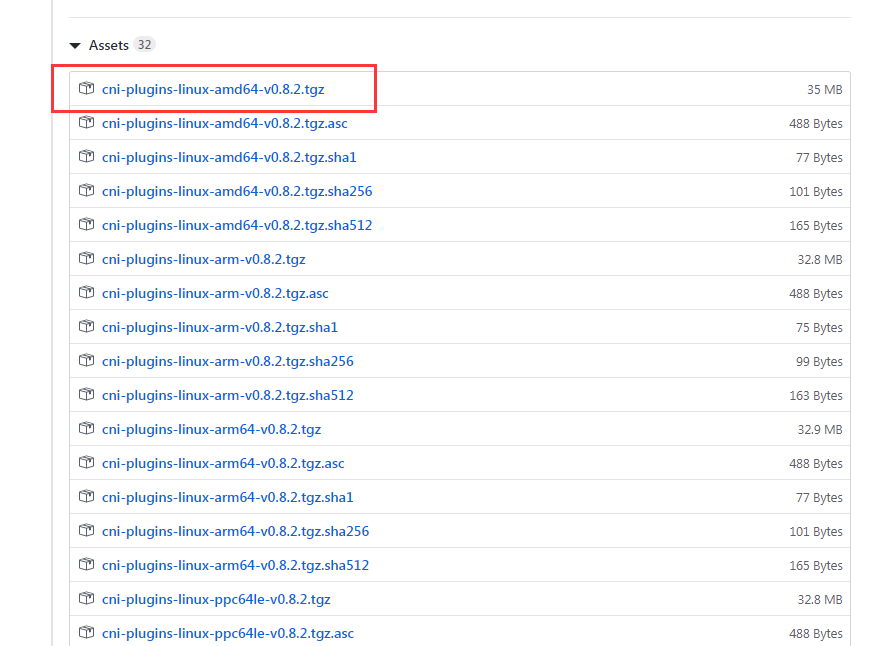

1 | # 二进制包下载地址:https://github.com/containernetworking/plugins/releases |

1 | [root@k8s-node1 kubernetes]# tail /opt/kubernetes/logs/kubelet.INFO |

1 | [root@k8s-node1 opt]# mkdir -p /opt/cni/bin # 二进制文件目录 kubelet使用 为pod创建网络 |

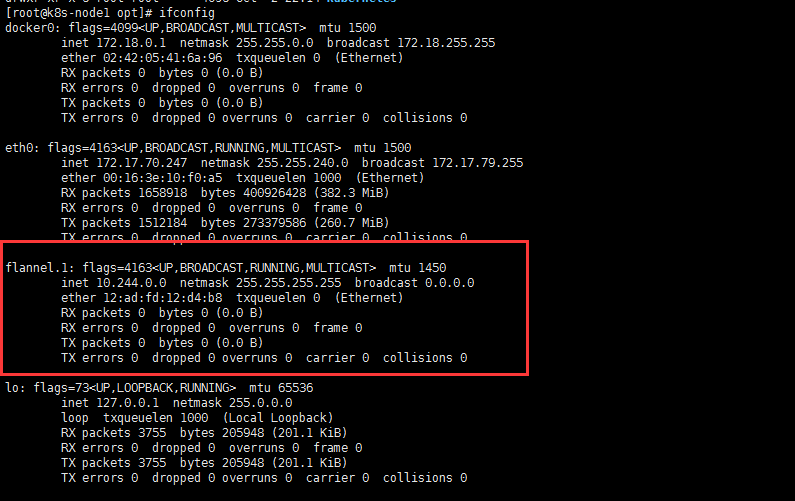

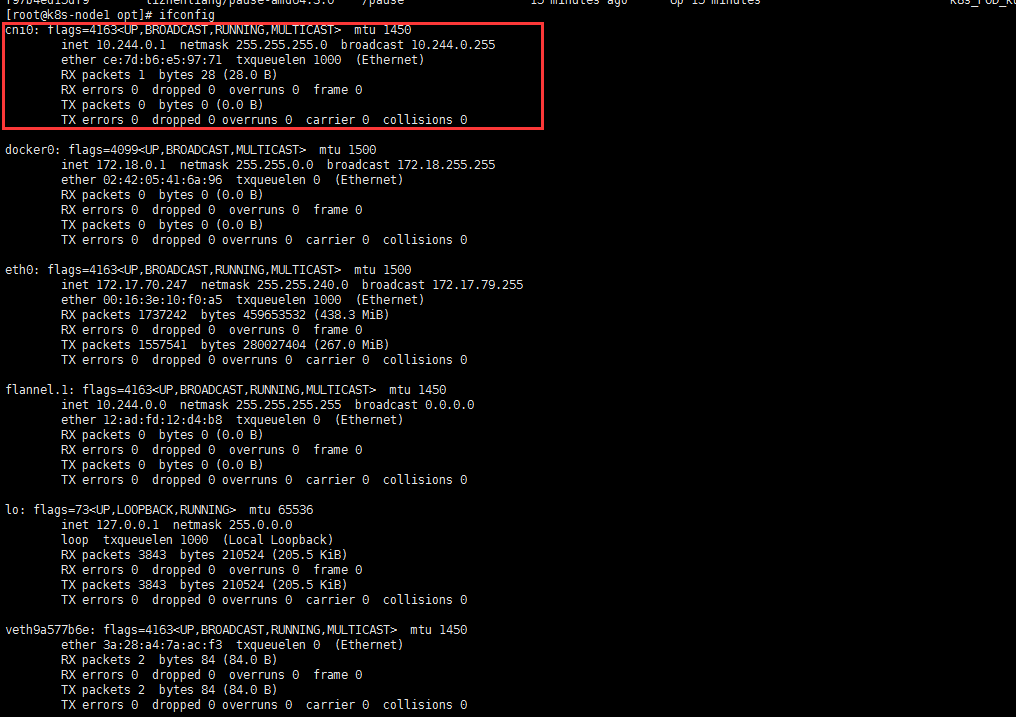

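Deploying flannel

```bash
# Deploy from the master
```

```bash
# Upload
```

```bash
# Start the pods and check whether they are running
```

```bash
# View the pod logs
```

```bash
# One pod is started on every node
```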
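The `net-conf.json` in the flannel manifest decides the pod network. Its `Network` must equal the controller manager's `--cluster-cidr` and kube-proxy's `clusterCIDR`. The sketch below prints that fragment and adds a hypothetical check that reads the value back. It assumes the upstream default `10.244.0.0/16` with the VXLAN backend.

```bash
#!/usr/bin/env bash
# Print the net-conf.json fragment embedded in kube-flannel.yml's ConfigMap.
#   $1 = pod network CIDR
flannel_net_conf() {
  cat <<EOF
{
  "Network": "$1",
  "Backend": { "Type": "vxlan" }
}
EOF
}

# Extract "Network" from a net-conf.json on stdin (hypothetical check).
net_conf_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p'
}

CLUSTER_CIDR="10.244.0.0/16"   # must equal kube-controller-manager --cluster-cidr
flannel_net_conf "$CLUSTER_CIDR" | net_conf_network
```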

Try creating an nginx pod

```bash
[root@k8s-master opt]# kubectl create deployment web --image=nginx:1.16
```

Deploying the in-cluster DNS service (CoreDNS)

- Purpose: provides DNS resolution for Services

```bash
[root@k8s-master ~]# kubectl get svc
```

```bash
# Fixed IP
```

```bash
# Deploy
```

```bash
# Create a busybox container
```
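Inside the busybox pod, `nslookup` should resolve Service names through CoreDNS. Services get names of the form `<service>.<namespace>.svc.<cluster-domain>`. The CoreDNS Service IP (the fixed IP above) must match the kubelet's `clusterDNS` setting. The sketch below builds the names to test. It assumes the default domain `cluster.local`, and the helper is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: fully qualified DNS name of a Service.
#   $1 = service, $2 = namespace (default: default), $3 = cluster domain
svc_fqdn() {
  echo "$1.${2:-default}.svc.${3:-cluster.local}"
}

svc_fqdn kubernetes            # the API server Service
svc_fqdn kube-dns kube-system  # CoreDNS is usually exposed as kube-dns

# Then, in the cluster (busybox:1.28 is commonly pinned for a working nslookup):
#   kubectl run -it --rm dns-test --image=busybox:1.28 --restart=Never -- sh
#   / # nslookup kubernetes
#   / # nslookup kubernetes.default.svc.cluster.local
```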

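Kubernetes high availability overview

```
LB: Nginx, LVS, HAProxy
```

Deploying master2

```bash
172.17.70.245 k8s-master2
```

```bash
# Copy the kubernetes directory over
```

```bash
# Edit the config files
```

```bash
# Copy kubectl over
```

```bash
# Start the services
```

```bash
# Check resources; if data comes back, it is working
```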

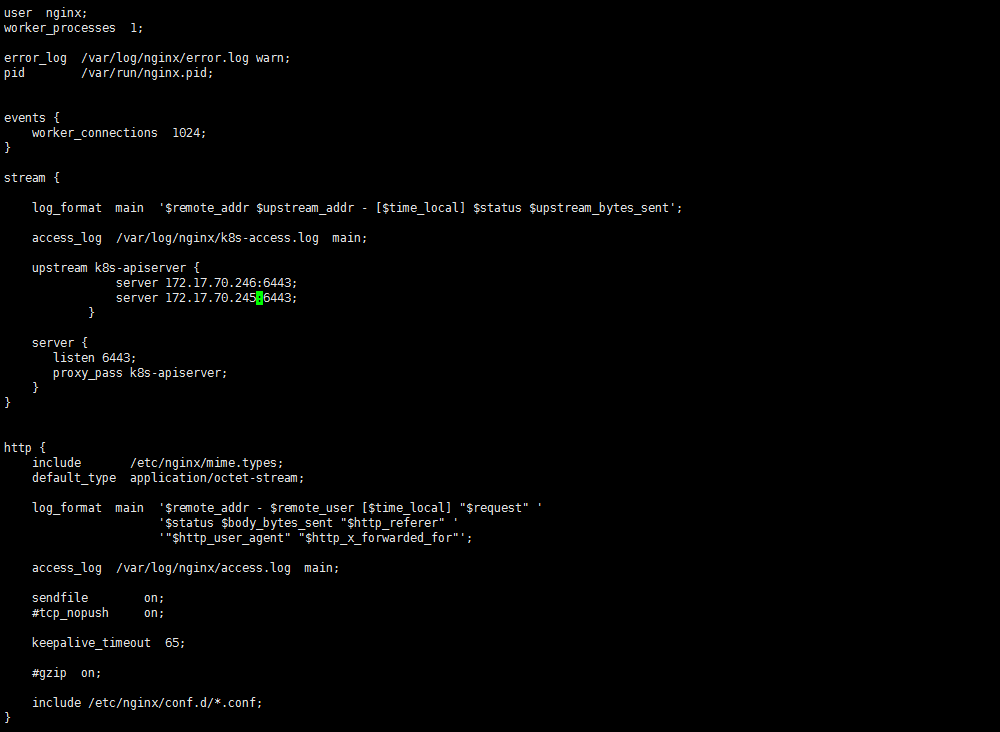

Deploying the Nginx load balancer

```bash
172.17.70.245 k8s-master2
```

```bash
# Download the rpm package
```

```bash
# Do this on both Nginx machines
```

```bash
# Start
```
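The apiserver speaks TLS end to end, so the Nginx load balancer forwards raw TCP with the `stream` module (layer 4) rather than proxying HTTP. The sketch below prints the block for `nginx.conf` on both Nginx machines. It goes at the top level, outside `http {}`. It assumes the master IPs are passed as arguments and uses a hypothetical listen port of 16443. The nginx build must include the stream module.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print an nginx "stream" block balancing the apiservers.
#   $1 = listen port, remaining args = master IPs
nginx_stream_conf() {
  local port="$1" ip; shift
  echo "stream {"
  echo "    upstream k8s-apiserver {"
  for ip in "$@"; do
    echo "        server ${ip}:6443;"
  done
  echo "    }"
  echo "    server {"
  echo "        listen ${port};"
  echo "        proxy_pass k8s-apiserver;"
  echo "    }"
  echo "}"
}

nginx_stream_conf 16443 192.168.1.11 192.168.1.12
```

Run `nginx -t` after editing to validate the configuration before restarting.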

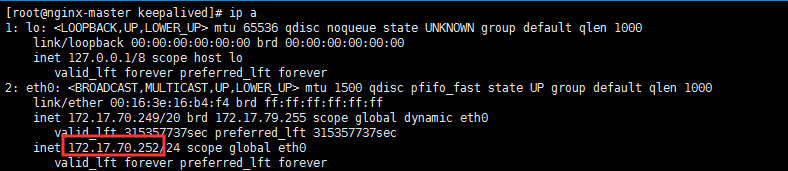

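Installing keepalived for heartbeat checks

```bash
[root@nginx-master ~]# yum install keepalived -y
```

```bash
# Edit the config file
```

```bash
# Check script
```

```bash
# Config file on the backup machine
```

```bash
# Start keepalived
```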
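The sketch below prints `keepalived.conf` for the two Nginx machines. It assumes interface `eth0`, a placeholder VIP, `virtual_router_id 51`, and a check script at `/etc/keepalived/check_nginx.sh` that exits non-zero when Nginx is down, for example a script containing `pgrep -x nginx >/dev/null`. When the check fails, keepalived marks this instance as faulty and the VIP moves to the backup. The backup differs only in `state BACKUP`, a lower `priority` and its `router_id`. The helper is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print keepalived.conf for the Nginx LB pair.
#   $1 = MASTER|BACKUP, $2 = priority, $3 = VIP (CIDR), $4 = interface
keepalived_conf() {
  local state="$1" prio="$2" vip="$3" iface="${4:-eth0}"
  cat <<EOF
global_defs {
    router_id NGINX_${state}
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${prio}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        check_nginx
    }
}
EOF
}

keepalived_conf MASTER 100 192.168.1.100/24   # on nginx-master
keepalived_conf BACKUP 90 192.168.1.100/24    # on the backup machine
```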

Pointing the nodes at the VIP

```bash
# Point the nodes at the VIP:
```

```bash
# Restart the node services
```
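Switching nodes to the VIP means replacing the single master address in every config file under `/opt/kubernetes/cfg` (the bootstrap, kubelet and kube-proxy kubeconfigs) and then restarting kubelet and kube-proxy. The sketch below does the replacement. It assumes the old address `192.168.1.11:6443` and a VIP listening on 16443 through Nginx; both are placeholders. The helper is hypothetical and only rewrites files in the directory you pass (GNU `sed -i`).

```bash
#!/usr/bin/env bash
# Hypothetical helper: rewrite the apiserver address in every file of a directory.
#   $1 = old "ip:port", $2 = new "ip:port", $3 = config directory
point_to_vip() {
  local old="$1" new="$2" dir="$3" esc f
  # Escape dots so sed matches them literally
  esc="$(printf '%s' "$old" | sed 's/[.]/\\./g')"
  grep -rlF -- "$old" "$dir" | while IFS= read -r f; do
    sed -i "s#${esc}#${new}#g" "$f"
    echo "updated $f"
  done
}

# On each node:
#   point_to_vip 192.168.1.11:6443 192.168.1.100:16443 /opt/kubernetes/cfg
#   systemctl restart kubelet kube-proxy
```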