Pod Overview

- The smallest deployable unit

Pod container types

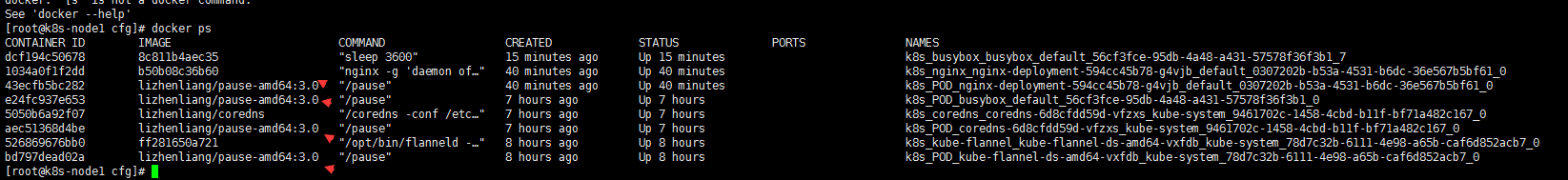

- Infrastructure Container: the base container

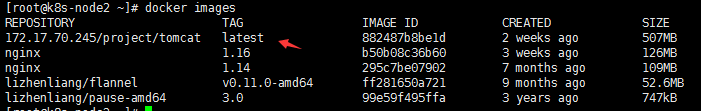

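- The infrastructure container starts automatically. It is transparent to users, but its image can be seen on the node with `docker images`.

- Download `pause-amd64:3.0`, push it to a private registry, and replace the image address in the kubelet configuration with the private registry address.

- One of these containers is created for every Pod; its role is to place all of the Pod's containers in a shared namespace.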

`[root@k8s-node1 cfg]# cat /opt/kubernetes/cfg/kubelet.conf`

How Pods are implemented

1. Shared network

Shared network

`# 1. After a Pod is created, the infrastructure container pause-amd64:3.0 is created before any application container`

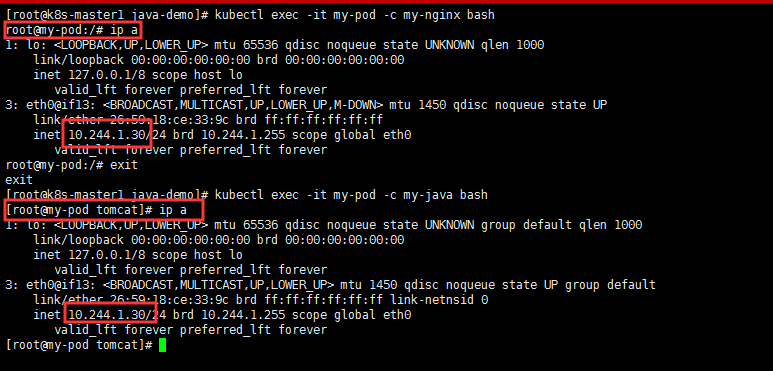

`# A Pod can hold multiple containers, and they share one network namespace`

`# Export the YAML of a running Pod and modify it`

`# Create`

`# Verify that the containers are in the same network namespace`
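As a sketch of these steps (the exported manifest itself is not shown), the hypothetical Pod below runs two containers. Because they share the Pod's network namespace, `client` should be able to reach `web` on `127.0.0.1`. The Pod name, container names, and images are assumptions.

```yaml
# Hypothetical Pod: two containers sharing one network namespace
apiVersion: v1
kind: Pod
metadata:
  name: shared-net-demo        # hypothetical name
spec:
  containers:
  - name: web
    image: nginx               # serves HTTP on port 80
    ports:
    - containerPort: 80
  - name: client
    image: busybox
    # Keep this container running so we can exec into it
    command: ["sh", "-c", "sleep 3600"]
```

After applying it, `kubectl exec -it shared-net-demo -c client -- wget -qO- http://127.0.0.1` should print the nginx welcome page, which only works because both containers use the same network stack.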

Shared storage

1. Pods exist for tightly coupled applications

1. Storage cannot be shared through a common storage namespace the way the network is

1. Persisting Pod data:

Sharing data between containers in the same Pod

`# 1. emptyDir`

`# Two containers are created: read and write`
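A minimal sketch of such a Pod, assuming `busybox` images and a hypothetical Pod name, volume name, and mount path: `write` appends to a file on an `emptyDir` volume and `read` follows the same file.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-demo          # hypothetical name
spec:
  containers:
  - name: write
    image: busybox
    # Append a timestamp to the shared file once per second
    command: ["sh", "-c", "while true; do date >> /data/hello; sleep 1; done"]
    volumeMounts:
    - name: data
      mountPath: /data
  - name: read
    image: busybox
    # Create the file if it does not exist yet, then follow it
    command: ["sh", "-c", "touch /data/hello; tail -f /data/hello"]
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    emptyDir: {}               # scratch space that lives as long as the Pod
```

`kubectl logs emptydir-demo -c read` should show the timestamps written by `write`. An `emptyDir` volume is deleted when the Pod is removed from its node, so it is shared scratch space rather than persistent storage.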

Why Pods exist

Pod image pull policy

- imagePullPolicy

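- IfNotPresent: the default; the image is pulled only if it is not already present on the host (if the tag is `:latest` or omitted, the default becomes Always)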

`# In the exported YAML the default value is IfNotPresent`
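For reference, the policy is set per container. The Pod name and image tag below are hypothetical; the comments list the three accepted values.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pull-policy-demo       # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx:1.17          # hypothetical pinned tag
    # Always:       check the registry every time the container starts
    # IfNotPresent: pull only if the image is missing on the node
    # Never:        never pull; use only an image already on the node
    imagePullPolicy: IfNotPresent
```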

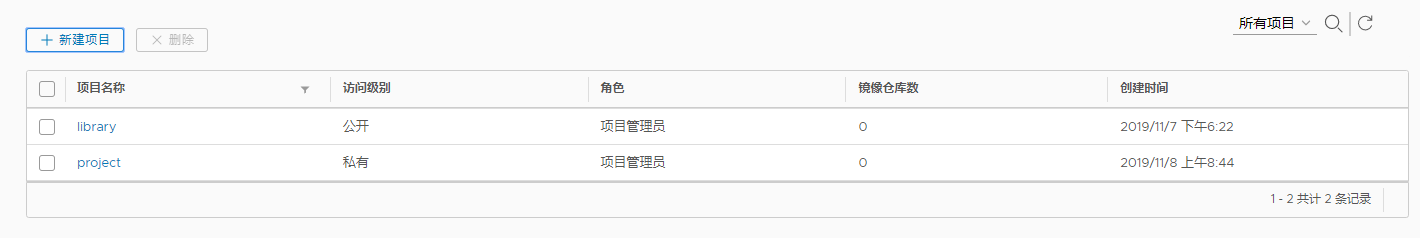

Pulling images from a private registry: Harbor credentials

Configure the registry as trusted

`[root@k8s-node1 ~]# cat /etc/docker/daemon.json`

`# Push an image to the registry`

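Configure Kubernetes authentication

- Even if `docker login` has been run on the Docker host, that does not mean Kubernetes is authenticated; Kubernetes itself is not logged in.

- Pulling from the private registry requires credentials.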

`# Reference documentation`

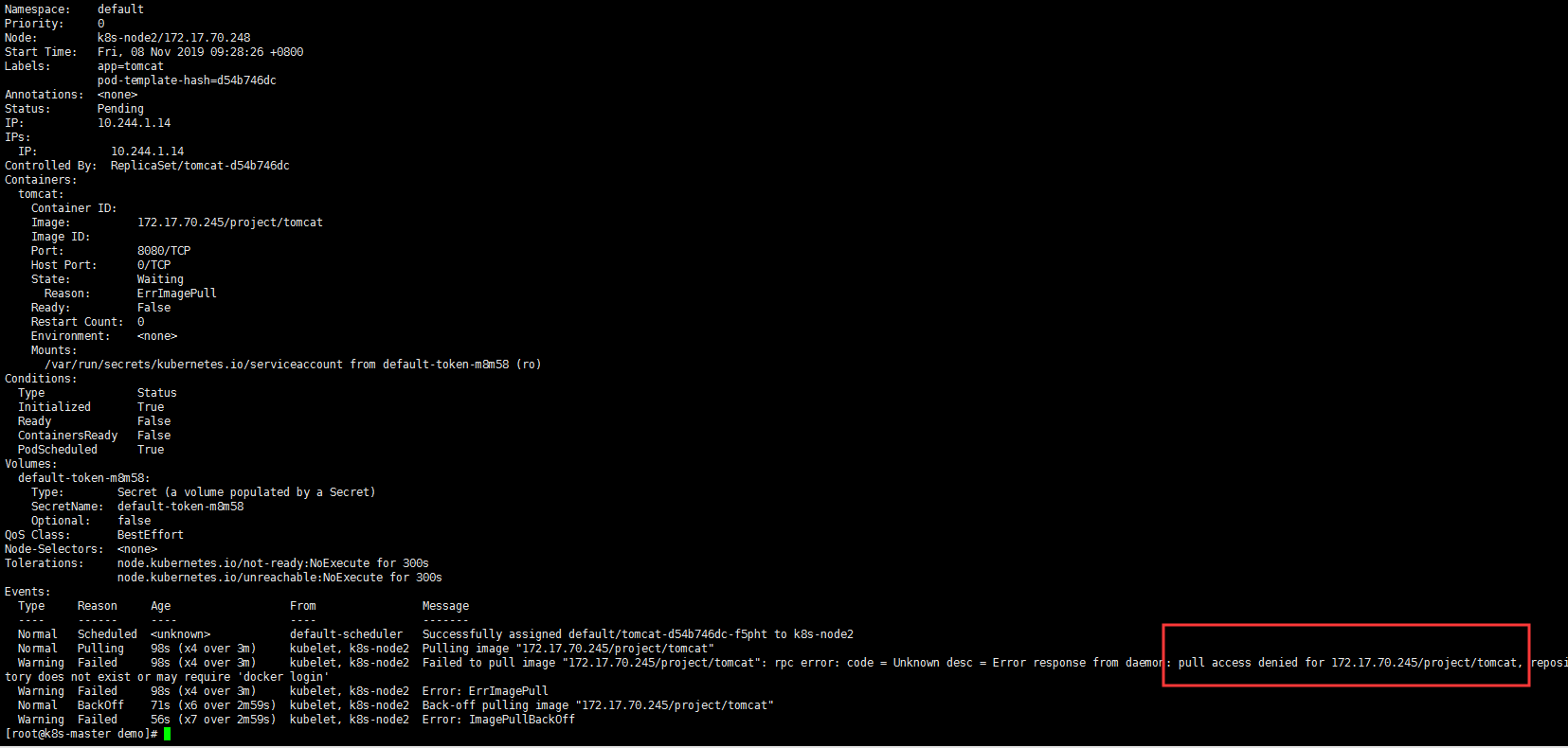

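Test: Kubernetes pulling from the private registry without credentials

- Docker and Kubernetes do not share the same credentials for logging in to the private registry.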

`# Create a tomcat.yaml`

`[root@k8s-master demo]# kubectl apply -f tomcat-deployment.yaml`

`# The image cannot be pulled`

View Pod events

`[root@k8s-master demo]# kubectl describe pod tomcat-d54b746dc-f5pht`

Configure credentials


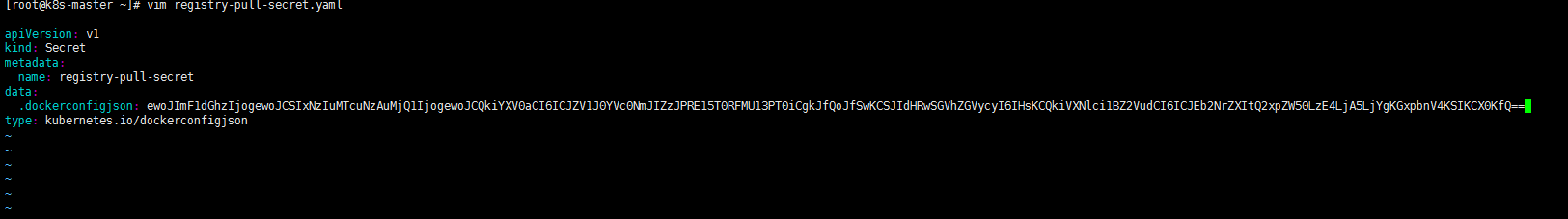

`# Secret configuration`

`[root@k8s-master ~]# kubectl create -f registry-pull-secret.yaml`
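A sketch of what `registry-pull-secret.yaml` might contain, assuming the Secret is named after the file: a `kubernetes.io/dockerconfigjson` Secret whose payload is the base64-encoded Docker client config written by `docker login`. The data value is a placeholder, not a real credential.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: registry-pull-secret   # assumption: same name as the file
type: kubernetes.io/dockerconfigjson
data:
  # base64-encoded ~/.docker/config.json from a host that ran `docker login`;
  # placeholder: replace with the output of `base64 -w 0 ~/.docker/config.json`
  .dockerconfigjson: REPLACE_WITH_BASE64
```

Alternatively, `kubectl create secret docker-registry registry-pull-secret --docker-server=<registry> --docker-username=<user> --docker-password=<password>` builds the same kind of Secret without handling base64 by hand.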

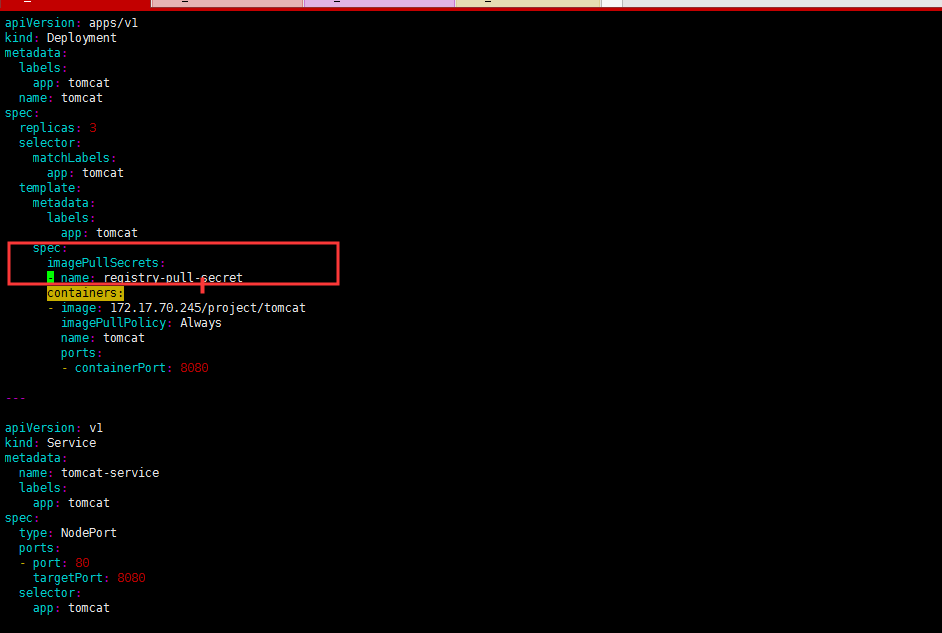

`# Configure the credentials`
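A sketch of how the `tomcat` Deployment can reference the credentials, assuming the Secret is named `registry-pull-secret`. The image address `harbor.example.com/library/tomcat:8` is hypothetical and stands in for the real Harbor project path.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat
  template:
    metadata:
      labels:
        app: tomcat
    spec:
      # Credentials the kubelet uses when pulling from the private registry
      imagePullSecrets:
      - name: registry-pull-secret            # assumption: the Secret's name
      containers:
      - name: tomcat
        image: harbor.example.com/library/tomcat:8   # hypothetical registry address
        ports:
        - containerPort: 8080
```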

`# Create`

`# Now it can be created`

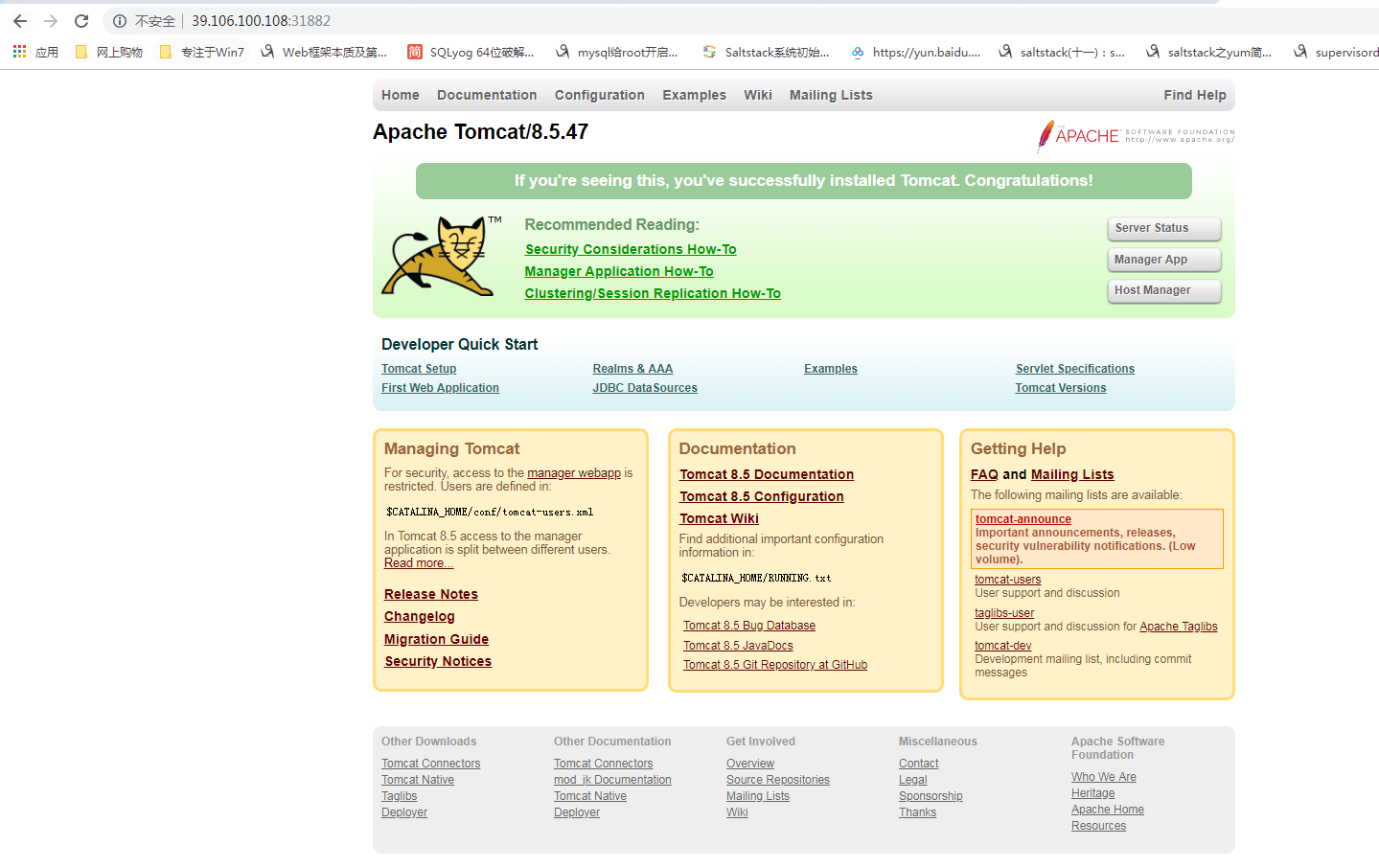

`# Test access`

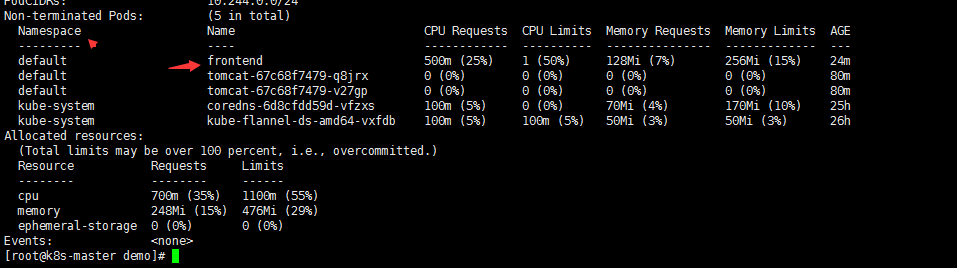

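Pod resource limits

- Resource requests and limits for Pods and Containers:

- A container must not be allowed to consume all of the host's physical resources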

`# Official example`

- `spec.containers[].resources.limits.cpu`

- `limits`: the overall cap on the resource

`# Enforced through Docker's own resource limits (cgroups)`
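A minimal sketch of requests and limits on a single container; the Pod name, image, and values are illustrative assumptions, not the contents of `pod2.yaml`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: resources-demo         # hypothetical name
spec:
  containers:
  - name: web
    image: nginx
    resources:
      requests:                # what the scheduler reserves on a node
        cpu: "250m"            # 0.25 CPU core
        memory: "64Mi"
      limits:                  # hard cap enforced by the container runtime
        cpu: "500m"            # CPU use above this is throttled
        memory: "128Mi"        # exceeding this gets the container OOM-killed
```

The scheduler places Pods based on `requests`, which is why node descriptions report allocated requests and limits per node.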

`[root@k8s-master demo]# vim pod2.yaml`

`[root@k8s-master demo]# kubectl apply -f pod2.yaml`

`[root@k8s-master demo]# kubectl get pod`

`# View the Pod's logs; with two containers in the Pod, choose one with -c`

Check node capacity and allocated resources

`# kubectl describe nodes k8s-node1`

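Pod restart policy

- In Kubernetes a Pod has no real notion of restarting: a "restart" recreates the container, and a replaced Pod is a brand-new Pod

- Jobs and scheduled tasks (CronJobs) run once, so Always is not suitable for them

- Always suits web services that should run continuously: if the container dies, a new one is started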

- Always: restart the container whenever it terminates; this is the default policy.
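A minimal sketch for observing restart behaviour, assuming a hypothetical `busybox` Pod. Note that `restartPolicy` is set at the Pod level and applies to all of its containers.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: restart-demo           # hypothetical name
spec:
  # Always (default) | OnFailure | Never
  restartPolicy: OnFailure
  containers:
  - name: busybox
    image: busybox
    # Exit with a non-zero code after 10s to simulate an abnormal exit;
    # change "exit 3" to "exit 0" and OnFailure will not restart it
    command: ["sh", "-c", "sleep 10; exit 3"]
```

`kubectl get pod restart-demo -w` should show the RESTARTS count rising while the container exits with code 3; with `exit 0` the Pod should instead end up `Completed`.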

Restart on abnormal exit

`[root@k8s-master demo]# kubectl edit deployment tomcat`

`[root@k8s-master demo]# kubectl apply -f pod3.yaml`

Change to a normal exit with no restart

`[root@k8s-master demo]# vim pod3.yaml`

`[root@k8s-master demo]# kubectl delete -f pod3.yaml`

Delete a Pod using its YAML file

`[root@k8s-master demo]# kubectl delete -f pod3.yaml`

Pod health checks: probes

https://kubernetes.io/docs/tasks/configure-pod-container/configure-liveness-readiness-probes/

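- A Service's endpoints are the IP addresses and ports of the Pods associated with that Service

- The purpose of health checks is to recover automatically when the service has problems: a failed liveness check restarts the container, and a failed readiness check removes the Pod from the Service endpoints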


- There are two probe types:

1. livenessProbe

- Probes support three check methods:

1. httpGet
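A sketch combining two of the check methods, assuming a hypothetical nginx Pod: a `readinessProbe` using `httpGet` and a `livenessProbe` using `tcpSocket` (the third method, `exec`, runs a command inside the container). The timing values are illustrative.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-methods-demo     # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
    ports:
    - containerPort: 80
    # readinessProbe + httpGet: passes when GET / on port 80 returns
    # a status code >= 200 and < 400; failing removes the Pod from endpoints
    readinessProbe:
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 5
      periodSeconds: 10
    # livenessProbe + tcpSocket: restart the container if port 80
    # stops accepting TCP connections
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 15
      periodSeconds: 20
```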

Health check example

`[root@k8s-master demo]# vim pod4.yaml`

`[root@k8s-master demo]# kubectl apply -f pod4.yaml`
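A sketch of an `exec` liveness probe in the style of the linked Kubernetes task page; it is not necessarily what `pod4.yaml` contains, and the Pod name is hypothetical. The container deletes its health file after 30 seconds, so the probe starts failing and the kubelet restarts the container.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: liveness-exec          # hypothetical name
spec:
  containers:
  - name: liveness
    image: busybox
    # Healthy for 30s, then the check file disappears
    args: ["/bin/sh", "-c", "touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600"]
    livenessProbe:
      exec:
        # A non-zero exit code from this command counts as a failed check
        command: ["cat", "/tmp/healthy"]
      initialDelaySeconds: 5
      periodSeconds: 5
```

A little after the 30-second mark, `kubectl describe pod liveness-exec` should show events reporting that the liveness probe failed, and `kubectl get pod` should show the RESTARTS count going up.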

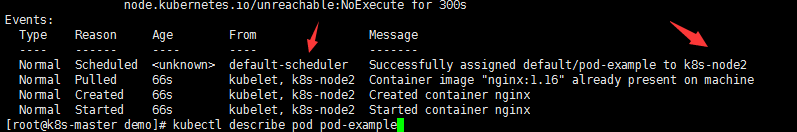

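Pod scheduling constraints

- Schedule a Pod onto a specific node

- For example, with many nodes we may want to split them by department: department A uses nodes 1, 2 and 3, department B uses nodes 4, 5 and 6

- By default, the scheduler scores nodes according to resource utilization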

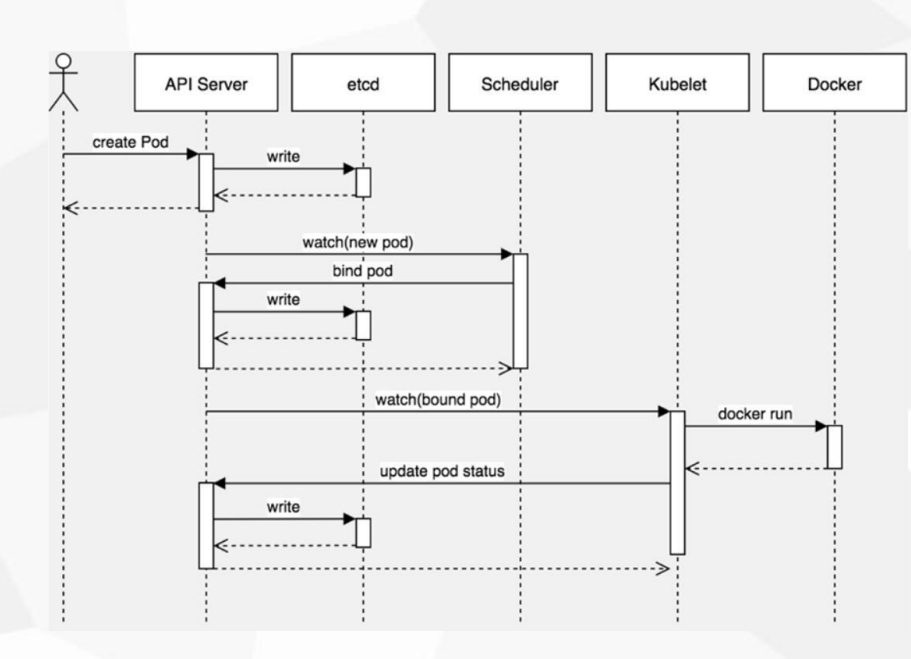

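Pod workflow diagram

- A user sends a Pod creation request from the command line to the apiserver.

- The apiserver receives the request and writes it to etcd, recording the Pod attributes the user asked for.

- The scheduler, through a watch on the apiserver, learns that a new Pod stored in etcd needs to be created.

- The scheduler uses its algorithm to decide which node should create the Pod and writes the decision back through the apiserver; etcd records which node the Pod is assigned to.

- The kubelet watches (through the apiserver) for Pods bound to its own node.

- Once the kubelet has the Pod's spec, it starts the containers through Docker (the equivalent of `docker run`) and reports the Pod status, which is stored in etcd.

- Finally, `kubectl get pod` asks the apiserver, which reads the Pod status from etcd.

- If a Deployment is created, the controllers also take part in the process.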

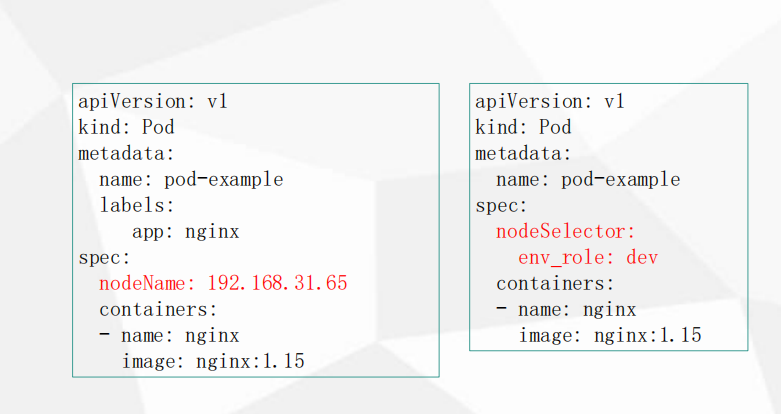

Scheduling constraints

- Two fields can be used:

1. nodeName: schedules the Pod onto the node with the given name, bypassing the scheduler

Create a Pod on a specified node

`[root@k8s-master demo]# vim pod5.yaml`
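A minimal sketch of `nodeName`, assuming the node is called `k8s-node1` (as in the shell prompts above) and a hypothetical Pod name; the real `pod5.yaml` is not shown.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-example            # hypothetical name
spec:
  # Bind directly to this node; the scheduler is bypassed
  nodeName: k8s-node1
  containers:
  - name: nginx
    image: nginx
```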

Delete all Pods at once through their YAML files

`[root@k8s-master demo]# cd /opt/demo/`

nodeSelector: scheduling by label

- First, label the node

- Both nodes and Pods can carry labels

`[root@k8s-master demo]# kubectl get nodes`

`[root@k8s-master demo]# vim pod5.yaml`
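A sketch of `nodeSelector`, assuming a hypothetical label `team=a` and a hypothetical Pod name. The node would be labeled first with `kubectl label nodes k8s-node1 team=a`, which `kubectl get nodes --show-labels` can confirm.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-example            # hypothetical name
spec:
  # Only nodes carrying this label are candidates for scheduling
  nodeSelector:
    team: "a"                  # hypothetical label
  containers:
  - name: nginx
    image: nginx
```

If no node carries the label, the Pod stays `Pending`.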

`[root@k8s-master demo]# kubectl delete -f .`

Pod troubleshooting

- Think the problem through systematically

`# Official manual`

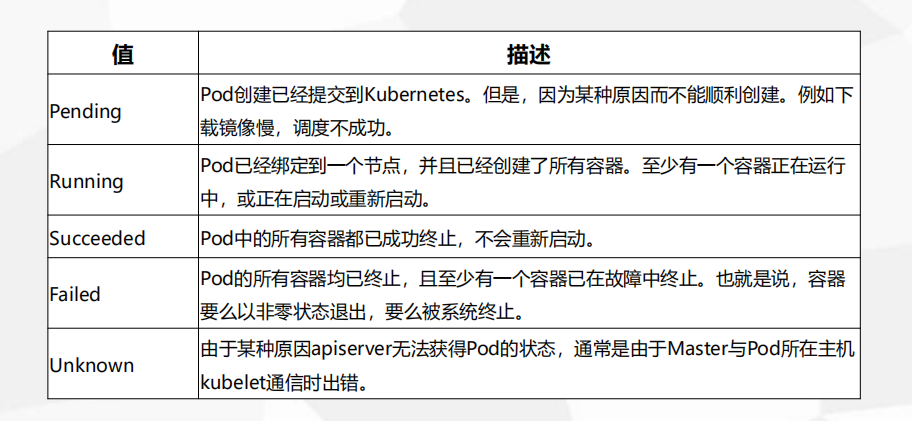

Pod status

`# STATUS`

View Pod events

`# Pending: the Pod cannot be created for some reason, e.g. a slow image pull or a scheduling failure`

View Pod logs

`# kubectl logs "podname" -n "namespace"`

Enter a container in the Pod

`kubectl describe TYPE/NAME`

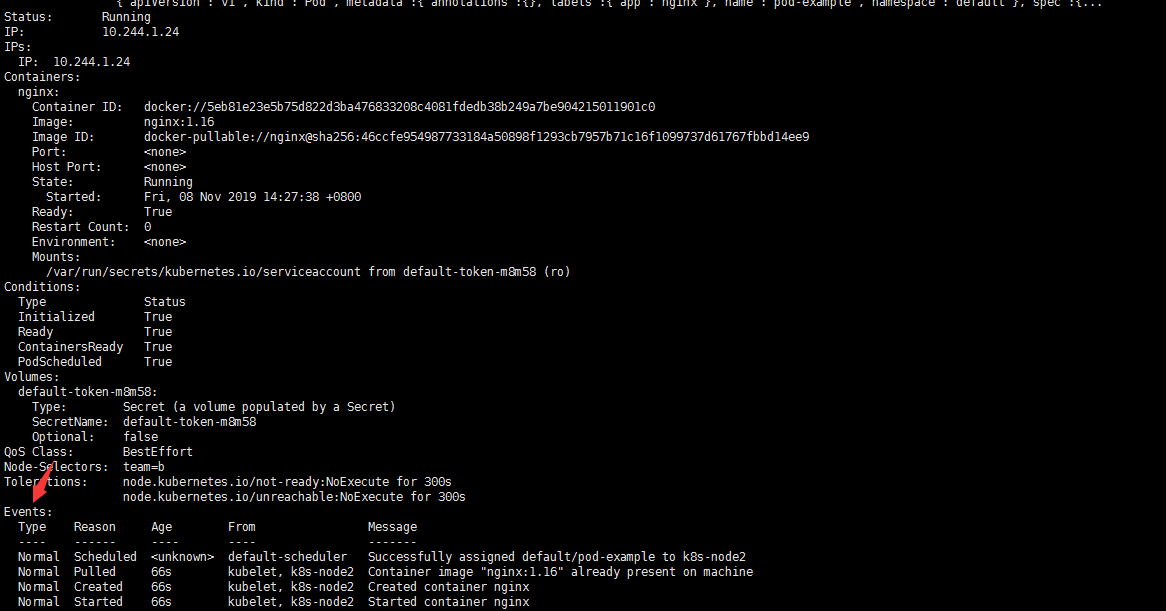

`[root@k8s-master demo]# kubectl exec -it pod-example -- bash`

`# The Pod is Running but the service did not start properly; enter the container to investigate`