Why do we need Helm?

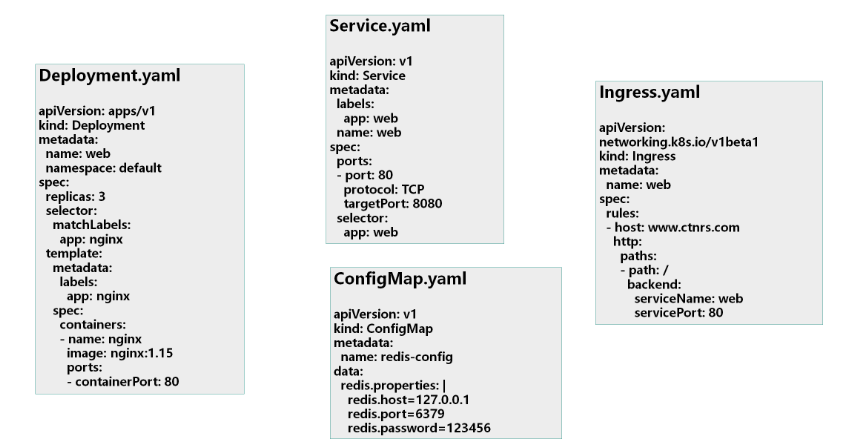

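- Applications on Kubernetes (K8s) are made up of specific resource descriptions, such as Deployments and Services.

- These descriptions are saved in separate files or collected into a single manifest, then deployed with `kubectl apply -f`.

- If an application consists of only one or a few such services, this deployment approach is sufficient.

- A complex application, however, has many such resource files. In a microservice architecture, for example, an application may consist of ten or even dozens of services.

- Updating or rolling back such an application can mean editing and maintaining a large number of resource files, and this way of organizing and managing applications quickly becomes inadequate.

- In addition, because released application versions are not tracked or controlled, maintaining and updating applications on Kubernetes runs into the following problems:

- How to manage these services as a single unit

- How to reuse these resource files efficiently

- No support for application-level version management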

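Introduction to Helm

- Helm is a package manager for Kubernetes, comparable to Linux package managers such as yum and apt. It makes it easy to deploy pre-packaged YAML manifests to Kubernetes.

Helm has three key concepts:

- helm: a command-line client tool, used mainly to create, package, publish, and manage Kubernetes application charts.

- Chart: the application description, a collection of files that describe the related Kubernetes resources.

- Release: a deployed instance of a chart. Each time Helm installs a chart it creates a corresponding release, and the actual running resource objects are created in Kubernetes.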

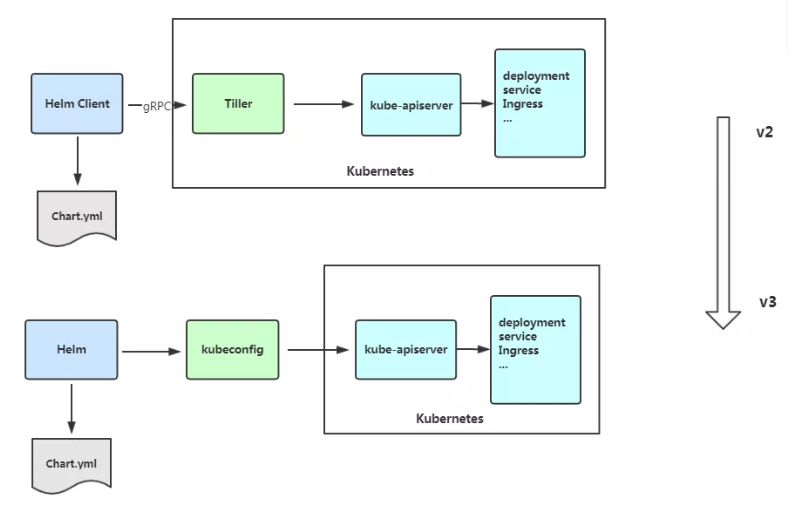

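Changes in Helm v3

- On November 13, 2019, the Helm team released the first stable version of Helm v3.

- The main changes in this release are:

Architecture changes

- The most visible change is the removal of Tiller.

- Release names can be reused across namespaces.

- Charts can be pushed to Docker image registries.

- Chart values can be validated with JSON Schema.

- Other changes

1. Some Helm CLI commands were renamed to better align with the terminology of other package managers.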

Helm client

Installing the Helm client

- Download the Helm client from https://github.com/helm/helm/releases

- Extract the archive and move the `helm` binary to /usr/bin/.

`wget https://get.helm.sh/helm-v3.0.0-linux-amd64.tar.gz`
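The download, extract, and move steps above can be sketched as a small shell function. This is a minimal sketch, assuming a linux-amd64 host with wget and tar installed and write access to /usr/bin. The function name `install_helm` is hypothetical, and the function is only defined here, not executed.

```shell
# Hypothetical helper: download a Helm v3 client and place it in /usr/bin.
# Assumes linux-amd64, wget, tar, and permission to write to /usr/bin.
install_helm() {
  version="${1:-v3.0.0}"                      # same release as the wget line above
  tarball="helm-${version}-linux-amd64.tar.gz"
  wget "https://get.helm.sh/${tarball}" &&
    tar -zxf "${tarball}" &&                  # unpacks into ./linux-amd64/
    mv linux-amd64/helm /usr/bin/helm &&
    helm version                              # confirm the client runs
}
```

Call it explicitly when ready, for example `install_helm v3.0.0`.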

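Common Helm commands

| Command | Description |
|---|---|
| create | Create a new chart with the given name |
| dependency | Manage a chart's dependencies |
| get | Download extended information for a named release. Subcommands: all, hooks, manifest, notes, values |
| history | Fetch the release history |
| install | Install a chart |
| list | List releases |
| package | Package a chart directory into a chart archive |
| pull | Download a chart from a remote repository and optionally unpack it locally, e.g. `helm pull stable/mysql --untar` |
| repo | Add, list, remove, update, and index chart repositories. Subcommands: add, index, list, remove, update |
| rollback | Roll back a release to a previous revision |
| search | Search for charts by keyword. Subcommands: hub, repo |
| show | Show information about a chart. Subcommands: all, chart, readme, values |
| status | Display the status of the named release |
| template | Render templates locally |
| uninstall | Uninstall a release |
| upgrade | Upgrade a release |
| version | Print the Helm client version |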

Configuring chart repositories in China

- Microsoft Azure China mirror (http://mirror.azure.cn/kubernetes/charts/): strongly recommended. It carries almost every chart available in the official repository.

- Alibaba Cloud repository (https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts)

- Official repository (https://hub.kubeapps.com/charts/incubator): the official chart repository, which is not reliably reachable from mainland China.

`helm repo add stable http://mirror.azure.cn/kubernetes/charts`

`[root@k8s-master1 ~]# helm repo add stable http://mirror.azure.cn/kubernetes/charts`

`# List all charts in the repository`

Adding multiple repositories

`[root@k8s-master1 ~]# helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts`

Removing a repository

`[root@k8s-master1 ~]# helm repo remove aliyun`
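The repository steps above can be collected into one sequence. This is a sketch, not a transcript of the original session: the `HELM` variable and the `repo_setup` function are conventions of this example only. By default the commands are printed rather than executed; set `HELM=helm` to run them against a real client.

```shell
# Dry run by default: print each command instead of executing it.
# Set HELM=helm in the environment to run the commands for real.
HELM="${HELM:-echo helm}"

repo_setup() {
  $HELM repo add stable http://mirror.azure.cn/kubernetes/charts
  $HELM repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
  $HELM repo update           # refresh the locally cached repository indexes
  $HELM repo list             # show the configured repositories
  $HELM search repo stable    # list charts matching the keyword "stable"
  $HELM repo remove aliyun    # drop a repository that is no longer needed
}

repo_setup
```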

Basic Helm usage

This section covers three operations:

chart install (`helm install`)

chart upgrade (`helm upgrade`)

chart rollback (`helm rollback`)

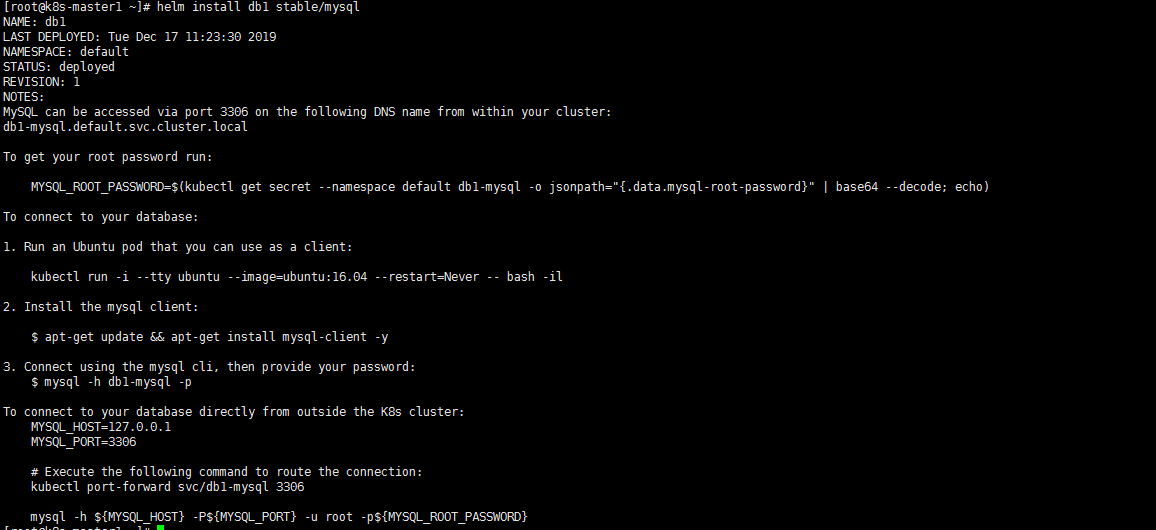

Deploying an application from a chart

`# Search for a chart`

`# Show chart information`

`# Install; db1 is the release name`

`# Check the deployment status`
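Only the comments of these steps are shown, so the following is one plausible sequence rather than the original commands. It assumes the `stable` repository has been added and uses the release name db1 mentioned above. The `HELM` dry-run variable and the `deploy_db1` function are hypothetical conventions of this sketch; set `HELM=helm` to execute for real.

```shell
# Dry run by default: print each command instead of executing it.
HELM="${HELM:-echo helm}"

deploy_db1() {
  $HELM search repo mysql          # find charts whose name or description mentions mysql
  $HELM show chart stable/mysql    # print the chart's metadata
  $HELM install db1 stable/mysql   # db1 is the release name
  $HELM status db1                 # show the state of the release
}

deploy_db1
```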

Checking status

`# Check the release status`

`# Check the pod status`

`# View events`

`# How to bind the PVC:`

`# View the PVC`

`# Create a PV that matches the db1-mysql PVC`

`[root@k8s-master1 ~]# kubectl get pvc db1-mysql -o yaml`

Creating the PV

`# Template`

`# Create the directory on the NFS host`

`# Create the PV`

`[root@k8s-master1 pv]# kubectl apply -f pv.yaml`
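The contents of pv.yaml are not shown, so the following is only a sketch of what an NFS-backed PV for the db1-mysql claim might look like. The PV name, NFS server address, and export path are placeholders, and the 8Gi size and ReadWriteOnce mode are assumptions that should be checked against the output of `kubectl get pvc db1-mysql -o yaml`.

```shell
# Write a candidate pv.yaml into a scratch directory for review.
workdir="$(mktemp -d)"
cat > "$workdir/pv.yaml" <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-db1                  # hypothetical name
spec:
  capacity:
    storage: 8Gi                # assumed; must cover the PVC's request
  accessModes:
    - ReadWriteOnce             # assumed; must include the PVC's access mode
  nfs:
    server: 192.168.0.10        # placeholder NFS server address
    path: /ifs/kubernetes/db1   # placeholder export path created on the NFS host
EOF
echo "Review $workdir/pv.yaml, then run: kubectl apply -f $workdir/pv.yaml"
```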

Login test

`# For network reasons, skip the test container from the official registry and log in directly`

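Using your own NFS-backed dynamic PV provisioning

- Modify the chart's deployment options

- The MySQL release deployed above did not come up at first. Not every chart runs successfully with its default configuration; some depend on the environment, for example on an available PV.

- So we need to customize the chart's configuration options. There are two ways to pass configuration data during installation:

- `--values` (or `-f`): specify a YAML file with overrides. It can be given multiple times, and the rightmost file takes precedence.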

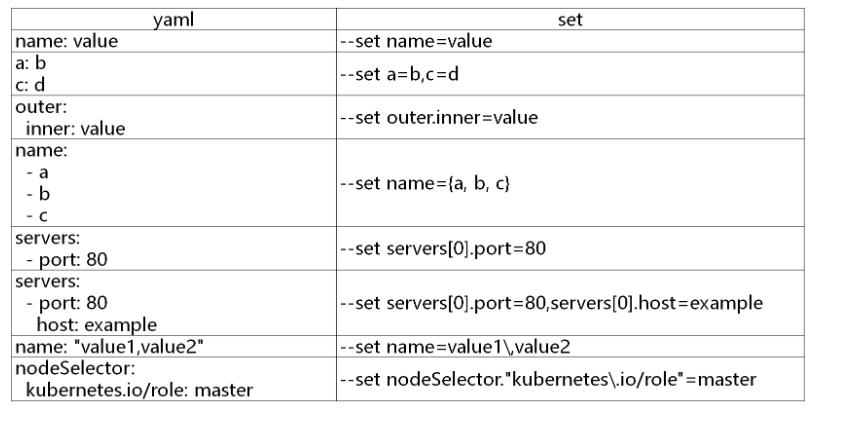

- `--set`: specify overrides on the command line. If both are used, `--set` takes precedence.

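Using values

- First write the values you want to change into a file

`[root@k8s-master1 pv]# helm show values stable/mysql > values.yaml # dump the defaults so they can be reviewed later`

`# The above creates a default MySQL user named k8s and grants it access to the newly created k8s database, while accepting all other defaults of the chart.`

`# Summary:`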
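The override file itself is not shown, so here is a minimal sketch of what it might contain. The keys `mysqlUser`, `mysqlDatabase`, and `persistence.storageClass` are assumed to match the chart's values (confirm them with `helm show values stable/mysql`). The file name config.yaml, the release name db2, and the `HELM` dry-run variable are hypothetical.

```shell
# Dry run by default: print the helm command instead of executing it.
HELM="${HELM:-echo helm}"

workdir="$(mktemp -d)"
# Only the values that differ from the chart defaults go into the file.
cat > "$workdir/config.yaml" <<'EOF'
mysqlUser: k8s
mysqlDatabase: k8s
persistence:
  storageClass: managed-nfs-storage
EOF

install_db2() {
  # -f may be repeated; the rightmost file takes precedence.
  $HELM install db2 -f "$workdir/config.yaml" stable/mysql
}

install_db2
```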

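Using set

`[root@k8s-master1 pv]# helm install db3 --set persistence.storageClass="managed-nfs-storage" stable/mysql`

`# Values passed with --set must follow its syntax, which maps onto the structured data in values`

Pulling the whole chart package

`# --untar unpacks the chart right after pulling`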

The helm install command can install from several sources

- A chart repository

- A local chart archive (helm install foo foo-0.1.1.tgz)

- A chart directory (helm install foo path/to/foo)

- A full URL (helm install foo https://example.com/charts/foo-1.2.3.tgz)

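Building a Helm chart

Generating the directory automatically

`[root@k8s-master1 ~]# helm create mychart`

`# Installing the auto-generated mychart as-is deploys an nginx service`

`# File contents:`

A simple hand-made chart

Create

`# Empty the files and directories`

`# Create the nginx Deployment`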

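Templates

- Templates are the core of Helm: they are templated Kubernetes manifest files.

- A Helm template is essentially a Go template, and Helm adds a good deal on top of the Go template language.

- These additions include custom metadata, extended function libraries, and programming-style constructs such as conditionals and pipelines, all of which make templates more expressive.

`# Change it into a dynamic template`

`# Add variables; the referenced names must match`

`# Install`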
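To illustrate the step from a static manifest to a dynamic template, the sketch below writes a minimal chart into a scratch directory. The value names `replicas`, `image`, and `imageTag`, and the release name web, are hypothetical choices for this example, not the ones used in the original files. The chart is rendered only if a `helm` binary is present.

```shell
chart="$(mktemp -d)/mychart"
mkdir -p "$chart/templates"

# Minimal chart metadata for Helm v3.
printf 'apiVersion: v2\nname: mychart\nversion: 0.1.0\n' > "$chart/Chart.yaml"

# Values referenced by the template; the names must match exactly.
cat > "$chart/values.yaml" <<'EOF'
replicas: 1
image: nginx
imageTag: "1.16"
EOF

cat > "$chart/templates/deployment.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}-deployment
spec:
  replicas: {{ .Values.replicas }}
  selector:
    matchLabels:
      app: {{ .Release.Name }}
  template:
    metadata:
      labels:
        app: {{ .Release.Name }}
    spec:
      containers:
        - name: nginx
          image: {{ .Values.image }}:{{ .Values.imageTag }}
EOF

# Render locally when helm is available; nothing is sent to a cluster.
if command -v helm >/dev/null 2>&1; then
  helm template web "$chart"
fi
```

Installing it would then be `helm install web "$chart"`.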

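Upgrade

- When a new version of a chart is released, or when you want to change the configuration of a release, use the `helm upgrade` command.

`# Edit values.yaml`

Rollback

- If a release does not behave as expected, use `helm rollback` to return to a previous revision.

`# Roll back to the previous revision`

`# Roll back to a specific revision`

Uninstall

`# Uninstall`

Package

`# The packaged chart can be pushed to a chart repository and shared with others.`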
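The upgrade, rollback, uninstall, and packaging steps can be sketched as one sequence. The release name web, the chart path ./mychart, and the `imageTag` value are placeholders, and the `HELM` dry-run variable is a convention of this sketch: commands are printed unless `HELM=helm` is set.

```shell
# Dry run by default: print each command instead of executing it.
HELM="${HELM:-echo helm}"

release_lifecycle() {
  $HELM upgrade web ./mychart --set imageTag=1.17   # or edit values.yaml and pass -f values.yaml
  $HELM history web                                 # list the revisions of the release
  $HELM rollback web                                # no revision given: previous revision
  $HELM rollback web 1                              # roll back to revision 1
  $HELM uninstall web                               # remove the release
  $HELM package ./mychart                           # build the chart archive (.tgz)
}

release_lifecycle
```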

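Helm in depth

Chart templates

- Templates are the core of Helm: they are templated Kubernetes manifest files.

- A Helm template is essentially a Go template, and Helm adds a good deal on top of the Go template language.

- These additions include custom metadata, extended function libraries, and programming-style constructs such as conditionals and pipelines, all of which make templates more expressive.

`[root@k8s-master1 ~]# helm create app01`

`# Remove the generated default template files`

`# Create the Deployment and Service`

`# Deploy directly`

`# Deploy with Helm`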

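Using templates dynamically

Using built-in objects

| Built-in object | Description |
|---|---|
| Release.Name | Release name |
| Release.Namespace | Release namespace |
| Release.Service | Name of the service performing the release (in Helm this is always Helm) |
| Release.Revision | Release revision number, starting at 1 and incremented from there |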

`# Deploy another application; change the label selector and image name`

`# Render through the template`

`[root@k8s-master1 templates]# vim service.yaml`

`# Edit values.yaml to set the referenced values`

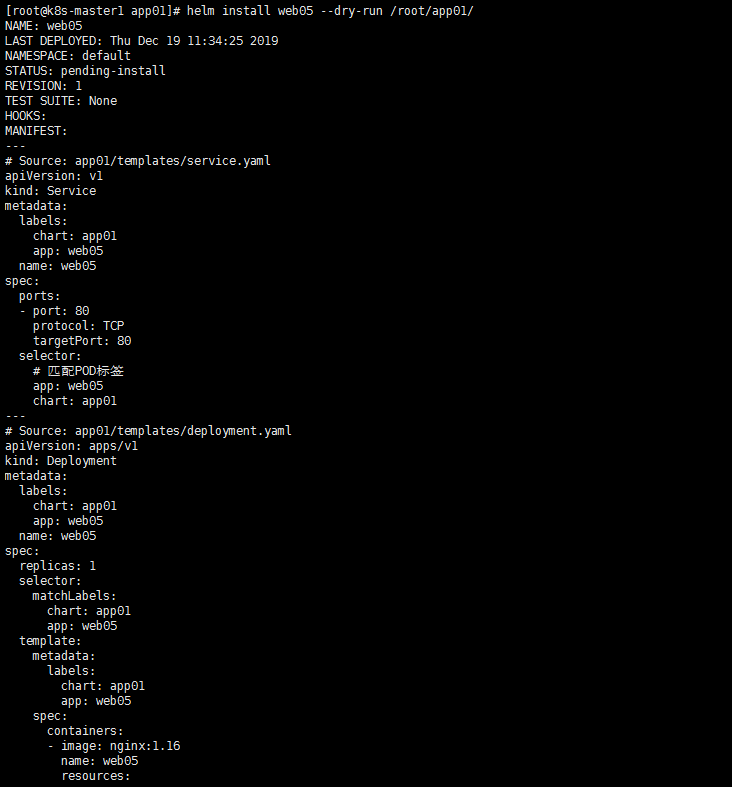

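Debugging and validation

- Helm also provides the `--dry-run --debug` flags to help you verify that templates are correct.

- Adding these two flags to `helm install` prints the resolved values and the rendered manifests without actually deploying a release.

`[root@k8s-master1 app01]# helm install web --dry-run /root/app01/`

`# Run the validation`

`# Upgrade`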
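A typical validation pass might look like the sketch below. It reuses the release name web and the chart path /root/app01/ from the command above; `helm lint` and `helm template` are additional checks not mentioned in the text. The `HELM` dry-run variable and the `check_chart` function are conventions of this sketch.

```shell
# Dry run by default: print each command instead of executing it.
HELM="${HELM:-echo helm}"

check_chart() {
  chart_dir="${1:-/root/app01/}"
  $HELM lint "$chart_dir"                            # static checks on the chart
  $HELM template web "$chart_dir"                    # render the manifests locally
  $HELM install web --dry-run --debug "$chart_dir"   # print values and manifests, deploy nothing
}

check_chart
```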

Creating new pods from the generic template

`[root@k8s-master1 app01]# vim values.yaml`

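Values

- The Values object supplies values to chart templates. Its values come from four sources:

- The values.yaml file in the chart

- The values.yaml file of the parent chart, when this chart is a subchart

- A custom YAML file passed to helm install or helm upgrade with `-f` or `--values`

- Values passed with `--set`

- Values from the chart's values.yaml can be overridden by a user-supplied values file, which in turn can be overridden by `--set` parameters.

`# Create a release by passing values with --set`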

Pipelines and functions

`# The quote function wraps a value in double quotes`

`# default supplies a fallback value`

Other functions:

Indentation: `{{ toYaml .Values.resources | indent 12 }}`
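The `quote` and `default` functions can be tried on a small ConfigMap. This is a sketch: the scratch chart stands in for app01, and the value names `name` and `env` are hypothetical. Since `env` is left unset in values.yaml, `default "dev"` supplies the fallback. The chart is rendered only if `helm` is installed.

```shell
chart="$(mktemp -d)/app01"
mkdir -p "$chart/templates"
printf 'apiVersion: v2\nname: app01\nversion: 0.1.0\n' > "$chart/Chart.yaml"

# Only "name" is set; "env" is deliberately missing.
printf 'name: hello\n' > "$chart/values.yaml"

cat > "$chart/templates/configmap.yaml" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-functions
data:
  # A pipeline passes the value on the left to the function on the right.
  name: {{ .Values.name | quote }}
  # default fills in "dev" because .Values.env is not set.
  env: {{ .Values.env | default "dev" | quote }}
  # Functions can be chained.
  upper: {{ .Values.name | upper | quote }}
EOF

if command -v helm >/dev/null 2>&1; then
  helm template web "$chart"
fi
```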

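Flow control

The Helm template language provides the following flow control statements:

`# These support more complex data handling logic`

if … else

`[root@k8s-master1 templates]# vim deployment.yaml`

`# Add - to strip the blank lines`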
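The effect of the `-` marker is easiest to see on a small template. In the sketch below, `{{-` trims the whitespace and newline before each control statement, so the rendered output has no blank lines where the `if`, `else`, and `end` lines were. The value name `env` and the `tier` key are hypothetical, and the scratch chart stands in for app01.

```shell
chart="$(mktemp -d)/app01"
mkdir -p "$chart/templates"
printf 'apiVersion: v2\nname: app01\nversion: 0.1.0\n' > "$chart/Chart.yaml"

# eq needs a value to compare, so env is defined explicitly.
printf 'env: test\n' > "$chart/values.yaml"

cat > "$chart/templates/configmap.yaml" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-if
data:
  {{- if eq .Values.env "prod" }}
  tier: production
  {{- else }}
  tier: non-production
  {{- end }}
EOF

if command -v helm >/dev/null 2>&1; then
  helm template web "$chart"
fi
```

Removing the dashes and rendering again shows the blank lines that the control statements would otherwise leave behind.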

Switching back to per-instance variables

`# Use the default template`

`[root@k8s-master1 app01]# vim values.yaml`

`# Update the variable references in the deployment and service files`

`# Test`

Conditional resource limits

`# Edit values to add resource limits`

`# Edit the deployment`

`[root@k8s-master1 app01]# helm install web05 --dry-run /root/app01/`

`[root@k8s-master1 app01]# kubectl get pods -o wide`

Adding a switch

`# Add a switch and test on enable: false | true`

`[root@k8s-master1 app01]# vim templates/deployment.yaml`

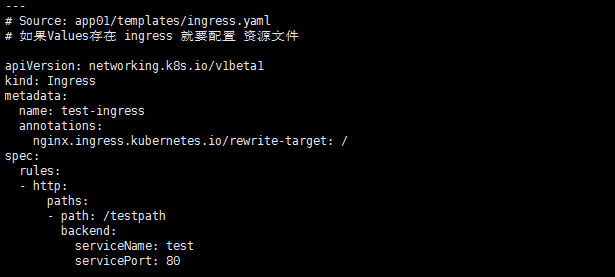

Ingress switch

`# If Values contains ingress, configure the resource; otherwise skip it`

`# Enabled; if set to false, the resource is not created`

`[root@k8s-master1 app01]# helm install web /root/app01/`

Controlling variable scope with with

`[root@k8s-master1 app01]# vim values.yaml`

`# Plain form`

`# Using with`

`# with + toYaml + indentation`
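The `with` + `toYaml` combination can be sketched as follows. Inside the `with` block, `.` is rebound to `.Values.nodeSelector`, and the block is skipped entirely when that value is empty. The `nodeSelector` entries are hypothetical, `nindent` is used here as the indentation function, and the scratch chart stands in for app01.

```shell
chart="$(mktemp -d)/app01"
mkdir -p "$chart/templates"
printf 'apiVersion: v2\nname: app01\nversion: 0.1.0\n' > "$chart/Chart.yaml"

cat > "$chart/values.yaml" <<'EOF'
nodeSelector:
  team: a
  disk: ssd
EOF

cat > "$chart/templates/pod.yaml" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: {{ .Release.Name }}-with
spec:
  {{- with .Values.nodeSelector }}
  nodeSelector:
    {{- toYaml . | nindent 4 }}
  {{- end }}
  containers:
    - name: web
      image: nginx
EOF

if command -v helm >/dev/null 2>&1; then
  helm template web "$chart"
fi
```

`toYaml` serializes the whole map, so new keys added under `nodeSelector` in values.yaml appear without touching the template.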

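range loops

- In the Helm template language, the `range` keyword performs loops.

- Inside the loop we use `.`, because the current scope is the loop itself, so `.` refers to the element currently being read.

`test:`

`# Source: app01/templates/configmap.yaml`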
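A minimal sketch of such a loop follows. It keeps the `test` key and the configmap.yaml file name from above, but the list items are placeholders, since the original values are not shown. Each iteration writes the current element, referenced as `.`, on its own line of a block scalar.

```shell
chart="$(mktemp -d)/app01"
mkdir -p "$chart/templates"
printf 'apiVersion: v2\nname: app01\nversion: 0.1.0\n' > "$chart/Chart.yaml"

# Placeholder list items.
cat > "$chart/values.yaml" <<'EOF'
test:
  - alpha
  - beta
  - gamma
EOF

cat > "$chart/templates/configmap.yaml" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-range
data:
  test: |
  {{- range .Values.test }}
    {{ . }}
  {{- end }}
EOF

if command -v helm >/dev/null 2>&1; then
  helm template web "$chart"
fi
```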

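Variables

- Using built-in objects inside with

- Reference them directly with $

`# Referencing a built-in object inside with fails: it cannot be found`

`# Use $ to reference built-in objects`

`# Define a variable above the with block`

`# Using variables with range`

`# Referencing a variable: wrong way`

`# Correct way`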
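The scoping rules above can be sketched in one template. Inside `with`, `.` no longer points at the root, so `.Release.Name` is not reachable there; the two working alternatives are `$`, which always refers to the root scope, and a variable assigned before the block. The value names `labels`, `project`, and `env` are hypothetical, and the scratch chart stands in for app01.

```shell
chart="$(mktemp -d)/app01"
mkdir -p "$chart/templates"
printf 'apiVersion: v2\nname: app01\nversion: 0.1.0\n' > "$chart/Chart.yaml"

cat > "$chart/values.yaml" <<'EOF'
labels:
  project: demo
env:
  JAVA_OPTS: "-Xmx1G"
  DEBUG: "false"
EOF

cat > "$chart/templates/configmap.yaml" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-vars
  {{- $releaseName := .Release.Name }}
  {{- with .Values.labels }}
  labels:
    project: {{ .project }}
    release: {{ $releaseName }}
    chart: {{ $.Chart.Name }}
  {{- end }}
data:
  {{- range $key, $value := .Values.env }}
  {{ $key }}: {{ $value | quote }}
  {{- end }}
EOF

if command -v helm >/dev/null 2>&1; then
  helm template web "$chart"
fi
```

In the `range` line, `$key` and `$value` receive the map key and its value on each iteration.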

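Named templates

- Named templates are defined with `define` and pulled in with `template`. In the templates directory, files whose names start with an underscore (_), such as _helpers.tpl, are treated as shared templates by default.

- Put code blocks that are used repeatedly into named templates

`# Define`

`# Reference`

`# include lets the output be piped through functions`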
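Define, reference, and `include` can be sketched together. The template names `app01.fullname` and `app01.labels` are hypothetical. `template` inserts the output as-is, while `include` returns it as a string, which is why only `include` can be piped into `nindent`.

```shell
chart="$(mktemp -d)/app01"
mkdir -p "$chart/templates"
printf 'apiVersion: v2\nname: app01\nversion: 0.1.0\n' > "$chart/Chart.yaml"

# Shared templates: the leading underscore keeps this file from being rendered as a manifest.
cat > "$chart/templates/_helpers.tpl" <<'EOF'
{{- define "app01.fullname" -}}
{{ .Chart.Name }}-{{ .Release.Name }}
{{- end -}}

{{- define "app01.labels" -}}
app: {{ template "app01.fullname" . }}
chart: {{ .Chart.Name }}-{{ .Chart.Version }}
{{- end -}}
EOF

cat > "$chart/templates/configmap.yaml" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ template "app01.fullname" . }}
  labels:
    {{- include "app01.labels" . | nindent 4 }}
data:
  chart: {{ .Chart.Name | quote }}
EOF

if command -v helm >/dev/null 2>&1; then
  helm template web "$chart"
fi
```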

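Developing your own chart: a Java application example

- First create the template

`helm create demo`

- Edit Chart.yaml and values.yaml, adding the commonly used variables

- In the templates directory, create the YAML files needed to deploy the image, and use variable references for the fields that change often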

Creating the template directory

`[root@k8s-master1 opt]# cd /opt/`

Editing Chart.yaml

`[root@k8s-master1 demo]# vim Chart.yaml`

Editing values.yaml

`# Keep only the variables that are used`

Preparing the application YAML files

`[root@k8s-master1 templates]# rm -rf tests/`

`# Three shared templates`

`# Package`