Volume & PersistentVolume

Official documentation

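- A Kubernetes Volume gives containers the ability to mount external storage.

- A Pod can use a Volume only after it declares both the volume source (`spec.volumes`) and the mount point (`spec.containers[].volumeMounts`).

- Local data volumes:

- hostPath

- emptyDir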

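Volume concepts

- Files inside a container are stored on disk only temporarily, which causes problems for some applications running in containers.

- First, when a container crashes, the kubelet restarts it and the files in the container are lost, because the container is rebuilt from a clean state.

- Second, when several containers run in the same Pod, they often need to share files.

- Kubernetes introduces the Volume abstraction to solve both problems.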

Supported Volume types

- Local: emptyDir, hostPath

- Network: self-hosted storage, for example Ceph

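emptyDir

- Creates an empty volume and mounts it into the containers of a Pod.

- The volume lives exactly as long as the Pod: when the Pod is deleted, the volume is deleted with it.

- Use case: sharing data between the containers of one Pod. Without a volume, each container's filesystem is isolated and a container sees only its own files.

- A volume lets those containers share a specific directory.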

`[root@k8s-master demo2]# vim emptydir.yaml`
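The contents of emptydir.yaml did not survive in these notes. As a rough sketch only, a manifest of this kind could look like the following. The Pod name `my-pod`, the container names, the `busybox` image, and the `/data` path are all assumptions chosen for illustration, not the original file.

```yaml
# Hypothetical emptydir.yaml: two containers sharing one emptyDir volume.
apiVersion: v1
kind: Pod
metadata:
  name: my-pod                 # assumed name
spec:
  containers:
    - name: write              # appends a timestamp to the shared file every second
      image: busybox
      command: ["sh", "-c", "while true; do date >> /data/hello; sleep 1; done"]
      volumeMounts:
        - name: data           # must match a name under spec.volumes
          mountPath: /data
    - name: read               # follows the file written by the other container
      image: busybox
      command: ["sh", "-c", "touch /data/hello; tail -f /data/hello"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      emptyDir: {}             # empty directory created with the Pod, removed with the Pod
```

Both containers mount the same volume name, which is what makes `/data` a shared directory; everything else in their filesystems stays isolated.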

`[root@k8s-master demo2]# kubectl apply -f emptydir.yaml`

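hostPath

- Mounts a file or directory from the Node's filesystem into the containers of a Pod.

- Use case: a container in the Pod needs to access files on the host.

- hostPath is roughly comparable to a Docker bind mount.

- emptyDir is roughly comparable to a Docker volume.

`[root@k8s-master demo2]# vim hostPath.yaml`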
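The original hostPath.yaml is not shown. A minimal sketch of such a manifest might be as follows. The Pod name `my-pod2` is taken from the `kubectl exec` command later in this section; the image, the host path `/tmp`, and the mount path `/data` are assumptions.

```yaml
# Hypothetical hostPath.yaml: expose a directory of the node inside the container.
apiVersion: v1
kind: Pod
metadata:
  name: my-pod2
spec:
  containers:
    - name: busybox
      image: busybox
      args: ["sh", "-c", "sleep 36000"]   # keep the container alive for inspection
      volumeMounts:
        - name: data
          mountPath: /data                # path seen inside the container (assumed)
  volumes:
    - name: data
      hostPath:
        path: /tmp                        # directory on the node (assumed)
        type: Directory                   # the directory must already exist on the node
```

The container sees whatever is in that directory on the node where the Pod happens to be scheduled, so the content differs from node to node.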

`[root@k8s-master demo2]# kubectl apply -f hostPath.yaml`

`[root@k8s-master demo2]# kubectl get pod -o wide`

`[root@k8s-master demo2]# kubectl exec -it my-pod2 -- sh`

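NFS (network storage)

- A local volume is tied to a specific node. If that node fails, its Pods are rescheduled onto other nodes and can no longer reach the data.

With a network volume, a newly started replacement Pod can still access the data.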

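Installing NFS

`# Use master2 as the NFS server`

- Configure the export path on the server and start the server daemon.

`[root@k8s-master2 ~]# vim /etc/exports`

- Configure and start

`# Kubernetes performs the mount for us as specified in the manifest; the nodes only need the NFS client installed`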
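The nfs.yaml manifest itself is missing from these notes. One possible shape, as a sketch under stated assumptions: the Deployment name `nginx-nfs-deployment` is inferred from the Pod name used later, while the server address `192.0.2.10` (a placeholder for k8s-master2), the export path `/data/nfs`, and the replica count are invented for illustration.

```yaml
# Hypothetical nfs.yaml: an nginx Deployment serving files from an NFS export.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-nfs-deployment
spec:
  replicas: 3                        # assumed
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
          volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html
      volumes:
        - name: wwwroot
          nfs:
            server: 192.0.2.10       # placeholder: address of the NFS server
            path: /data/nfs          # placeholder: a path exported in /etc/exports
```

Because every replica mounts the same export, all Pods serve the same files regardless of which node they run on.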

`[root@k8s-master demo2]# kubectl apply -f nfs.yaml`

`[root@k8s-master demo2]# kubectl get pod`

`[root@k8s-master demo2]# kubectl exec -it nginx-nfs-deployment-5b8f7c4d57-9tbgq -- bash`

`# Tear down`

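PersistentVolume: persistent storage volumes

- PersistentVolume (PV): an abstraction over how storage is created and used, so that storage is managed as a cluster resource. (This is typically the job of storage administrators.)

- Static: PVs are created manually

- Dynamic: PVs are created automatically

PersistentVolumeClaim (PVC): lets users ignore the implementation details of the underlying Volume and care only about how much capacity they need.

Purpose: storage is managed as part of the cluster. Developers do not need to care how the storage is created, and do not have to worry about exposing where it lives.

A Pod that uses a persistent volume can access it wherever it is scheduled, even after the Pod is destroyed and recreated.

A PV provides storage capacity and a PVC consumes it. A PV and a PVC are related by binding; once bound, the PV cannot be used by any other claim.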

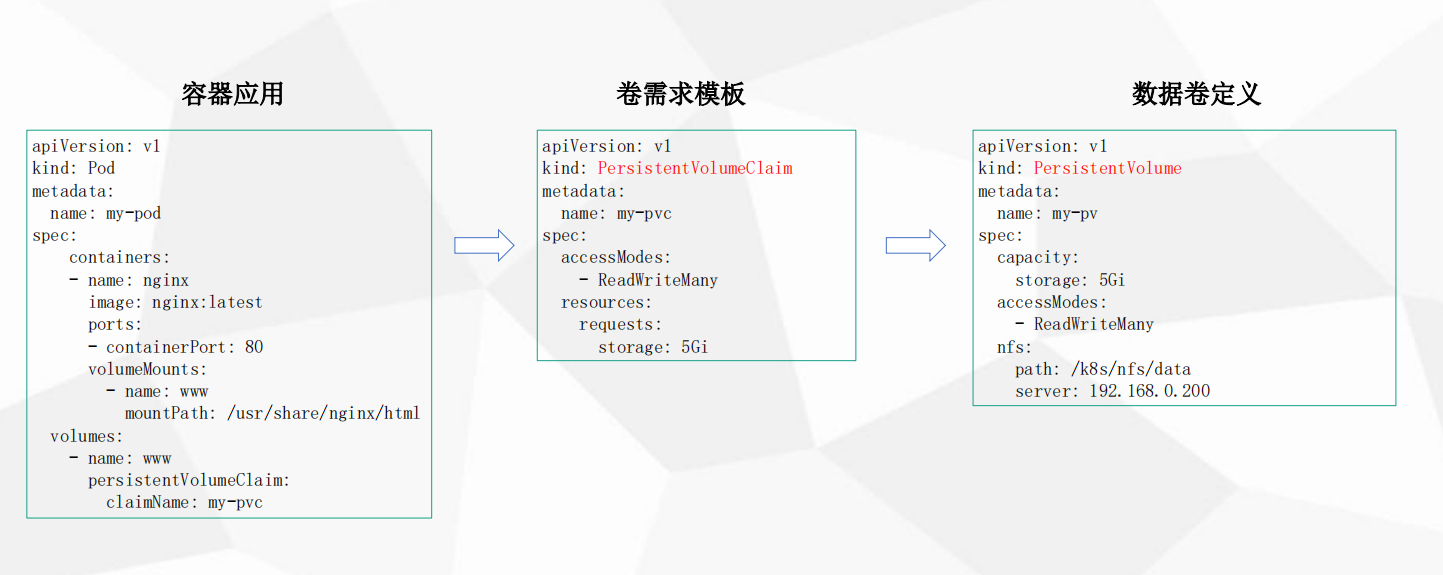

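Static PV provisioning

- Reference the PVC from the container spec

- Create the PVC as a request template

- Create the PV

Creating a PV

- PVs can be defined by storage administrators, who create a pool of PVs in advance and leave them waiting for PVCs to bind.

`[root@k8s-master1 demo]# cat pv-nfs.yaml`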
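The output of `cat pv-nfs.yaml` is not preserved here. A minimal sketch of a statically defined NFS-backed PV could look like this; the name `my-pv`, the 5Gi capacity, the server address, and the export path are assumptions.

```yaml
# Hypothetical pv-nfs.yaml: a pre-created PV waiting for a matching PVC.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv                # assumed name
spec:
  capacity:
    storage: 5Gi             # size advertised to claims
  accessModes:
    - ReadWriteMany          # NFS can be mounted read-write by many nodes
  nfs:
    server: 192.0.2.10       # placeholder: address of the NFS server
    path: /data/nfs          # placeholder: exported directory
```

A claim can bind to this PV only if its requested size fits within 5Gi and its access mode is one the PV offers.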

Creating a Deployment that references the PVC

`[root@k8s-master1 demo]# vim nfs-pvc.yaml`

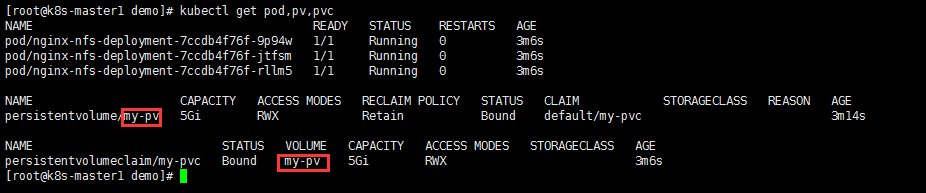
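Since the text of nfs-pvc.yaml is not included, the following is only a sketch of how a Deployment and its PVC might be declared in one file. The Deployment name is inferred from the Pod name used below; the claim name `my-pvc`, the size, and the mount path are assumptions.

```yaml
# Hypothetical nfs-pvc.yaml: a Deployment plus the PVC it consumes.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-nfs-deployment
spec:
  replicas: 3                          # assumed
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html
      volumes:
        - name: wwwroot
          persistentVolumeClaim:
            claimName: my-pvc          # the Pod names the claim, never the PV
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc                         # assumed name
spec:
  storageClassName: ""                 # empty string: bind only to pre-created PVs
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi                     # must fit within the capacity of an available PV
```

The Pod template refers only to the claim name, so the storage details (NFS server, path) stay out of the application manifest.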

`[root@k8s-master1 demo]# kubectl apply -f nfs-pvc.yaml`

`[root@k8s-master1 demo]# kubectl exec -it nginx-nfs-deployment-7ccdb4f76f-7m6cp -- bash`

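Deleting the PVC and PV

- By default (the `Retain` reclaim policy of a manually created PV), once a PVC is deleted the PV it was bound to is released but cannot be claimed again. The PV has to be cleaned up manually, although the data is still there.

`[root@k8s-master1 demo]# kubectl delete -f nfs-pvc.yaml`

`# Redeploy the application and check the data`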

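Summary of static PV provisioning

- With static provisioning, the Pod requests storage through a PVC, which can be defined in the same YAML file as the Deployment.

- The volume definition is supplied by creating a PV.

- A PVC is matched to a PV according to the binding rules, chiefly storage capacity and access mode.

- In this approach every PV has to be created by hand. How can provisioning and binding be automated?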

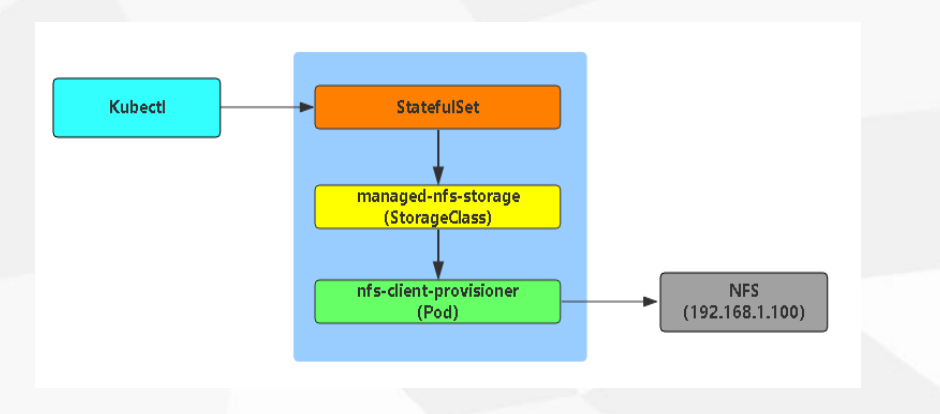

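Dynamic PV provisioning

- This mainly addresses the capacity problem: carving out volumes by hand is tedious, and a PVC cannot bind if no PV matches the capacity it requests.

- Dynamic provisioning in Kubernetes allocates capacity on demand.

- The core of the Dynamic Provisioning mechanism is the StorageClass API object.

- A StorageClass declares the storage plugin that is used to create PVs automatically.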

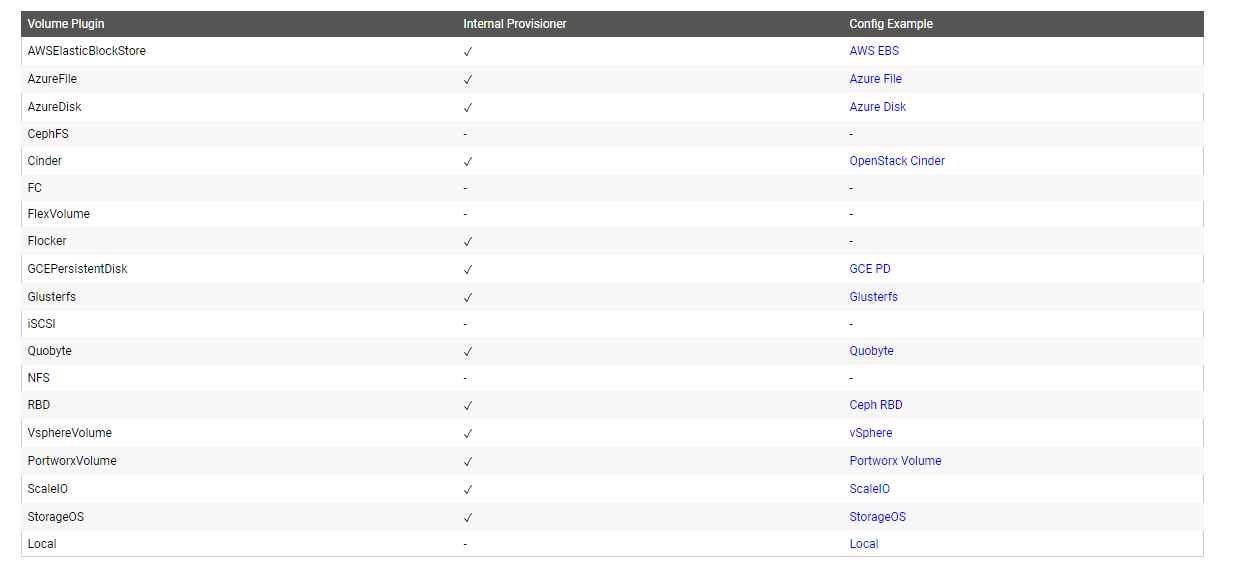

Storage plugins that Kubernetes supports for persistent volumes

https://kubernetes.io/docs/concepts/storage/persistent-volumes/

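StorageClass

- A StorageClass drives the backend storage automatically and creates PVs automatically.

- A StorageClass declares which storage plugin to use; the plugin talks to the storage backend.

- Storage plugins for which Kubernetes supports dynamic provisioning:

https://kubernetes.io/docs/concepts/storage/storage-classes/

`# Upload the storage-class directory`

StorageClass definition

`[root@k8s-master1 storage-class]# cat storageclass-nfs.yaml`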
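The file contents are not reproduced in these notes. As an illustrative sketch, a StorageClass for an NFS client provisioner might look like this. The class name `managed-nfs-storage` and the provisioner string `fuseim.pri/ifs` are assumptions; the provisioner string is free-form, but it has to match whatever name the provisioner Pod is configured with.

```yaml
# Hypothetical storageclass-nfs.yaml.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage        # assumed; PVCs refer to this name
provisioner: fuseim.pri/ifs        # assumed; must equal the provisioner's configured name
parameters:
  archiveOnDelete: "true"          # provisioner-specific: archive the data when a PVC is deleted
```

The StorageClass holds no storage itself; it only tells Kubernetes which provisioner should create a PV when a claim names this class.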

Provisioner: creating PVs automatically

`# This service creates PVs for us automatically`
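The provisioner manifest is not included here. The sketch below is modeled on the nfs-client-provisioner from the retired kubernetes external-storage project, which is an assumption about what these notes used. The NFS server address and export path are placeholders, and `PROVISIONER_NAME` is assumed to match the `provisioner` field of the StorageClass.

```yaml
# Hypothetical deployment of an NFS client provisioner.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner   # needs RBAC permissions on PVs and PVCs
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes        # where the provisioner creates per-PV directories
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs                # assumed; must match the StorageClass
            - name: NFS_SERVER
              value: 192.0.2.10                    # placeholder
            - name: NFS_PATH
              value: /data/nfs                     # placeholder
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.0.2.10                     # placeholder, same as NFS_SERVER
            path: /data/nfs                        # placeholder, same as NFS_PATH
```

The provisioner mounts the NFS export itself and creates one subdirectory per claim, which is how a single export can back many dynamically created PVs.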

Reference:

Authorization

- The plugin that creates PVs dynamically has to talk to the apiserver, so it must be granted permissions.

`[root@k8s-master1 storage-class]# cat rbac.yaml`
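The rbac.yaml contents are not shown. A trimmed sketch of the kind of permissions such a provisioner typically needs is given below; the object names and the `default` namespace are assumptions, and a real deployment may require additional rules (for example for leader election).

```yaml
# Hypothetical rbac.yaml for a PV provisioner.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: default                    # assumed namespace
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]    # create and delete PVs on demand
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
```

PVs and StorageClasses are cluster-scoped, which is why a ClusterRole is used here rather than a namespaced Role.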

Creating the dynamic PV provisioning setup

`[root@k8s-master1 storage-class]# kubectl apply -f storageclass-nfs.yaml`

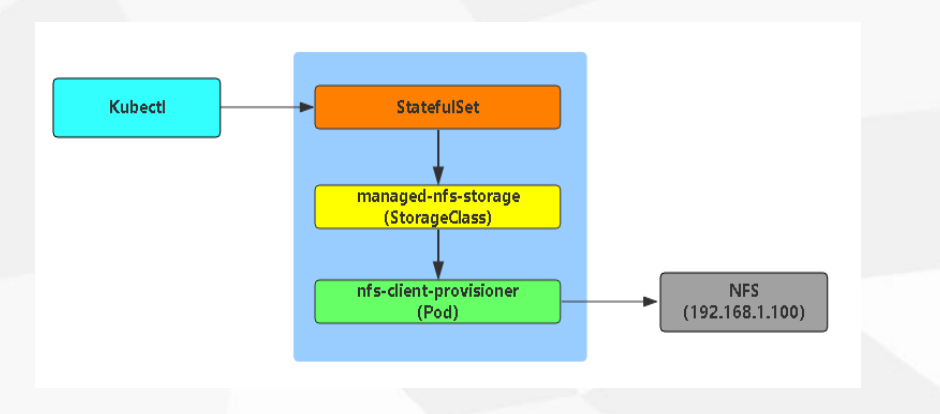

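Automatic provisioning flow

- Deploy a stateful application with kubectl: each replica gets a unique network identity (hostname = DNS name) and its own persistent storage.

- In a MySQL primary/replica setup each instance holds different data, so each needs storage that keeps its own data.

- A StatefulSet also manages storage: both the network identity and the storage are numbered 0, 1, 2.

- When an application is deployed, its storage request goes to the StorageClass, then to the nfs-client-provisioner Pod, which asks NFS to create the PV.

- The application only has to state which StorageClass to use.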
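To make the last step of the flow concrete, here is a minimal sketch of a claim that selects a StorageClass by name. The claim name `test-claim`, the class name `managed-nfs-storage`, and the requested size are assumptions for illustration.

```yaml
# Hypothetical PVC that triggers dynamic provisioning.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim                        # assumed name
spec:
  storageClassName: managed-nfs-storage   # assumed; must name an existing StorageClass
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi                        # the provisioner creates a PV of this size
```

No PV has to exist beforehand: creating the claim is what prompts the provisioner behind the named class to create a matching PV and bind it.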

Dynamic PV provisioning example: StatefulSet + MySQL

`[root@k8s-master1 storage-class]# cat mysql-demo.yaml`
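The contents of mysql-demo.yaml are not preserved. A sketch of a comparable manifest follows. The StatefulSet name `db` is inferred from the Pod name `db-0` used below; the Service name `mysql`, the image tag, the root password, the class name, and the volume size are assumptions.

```yaml
# Hypothetical mysql-demo.yaml: headless Service + StatefulSet with a claim template.
apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  clusterIP: None                  # headless: gives each Pod a stable DNS name
  selector:
    app: mysql
  ports:
    - name: mysql
      port: 3306
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: db
spec:
  serviceName: mysql               # must reference the headless Service
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: mysql:5.7
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "123456"      # demo value only; use a Secret in practice
          ports:
            - name: mysql
              containerPort: 3306
          volumeMounts:
            - name: mysql-data
              mountPath: /var/lib/mysql
  volumeClaimTemplates:            # one PVC per replica, named mysql-data-db-<ordinal>
    - metadata:
        name: mysql-data
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: managed-nfs-storage   # assumed class name
        resources:
          requests:
            storage: 2Gi
```

With this layout the first replica would be reachable inside the cluster as `db-0.mysql`, and its claim `mysql-data-db-0` outlives the Pod, so a recreated `db-0` reattaches to the same data.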

`[root@k8s-master1 storage-class]# kubectl get pod,pv,pvc`

Create a MySQL client Pod to test the database connection

`# Access MySQL through its DNS name`

Delete a Pod to test whether the storage is re-attached automatically

`[root@k8s-master1 storage-class]# kubectl delete pod db-0`

Deleting a PV

1. First delete the Pod and the PVC associated with it

Recreating the PV, the PVC, and the stateful MySQL deployment

`# If the PV and PVC were deleted, recreating the Pod allocates new storage`