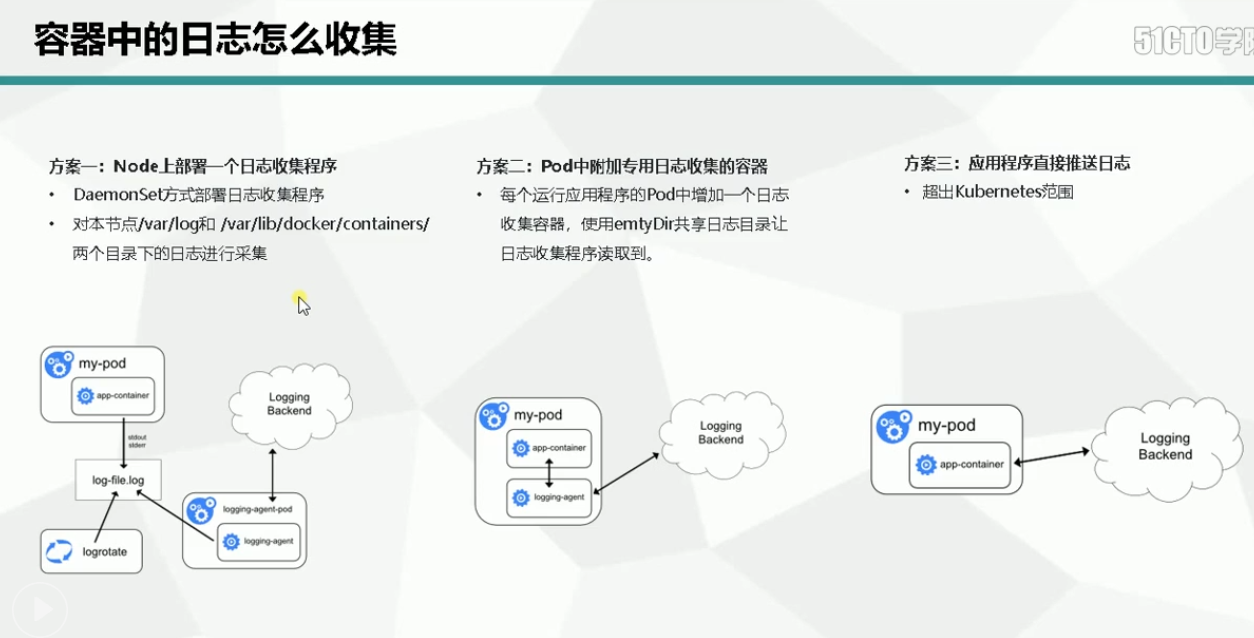

Which logs to collect

- Kubernetes system component logs

- Application logs from inside Kubernetes pods

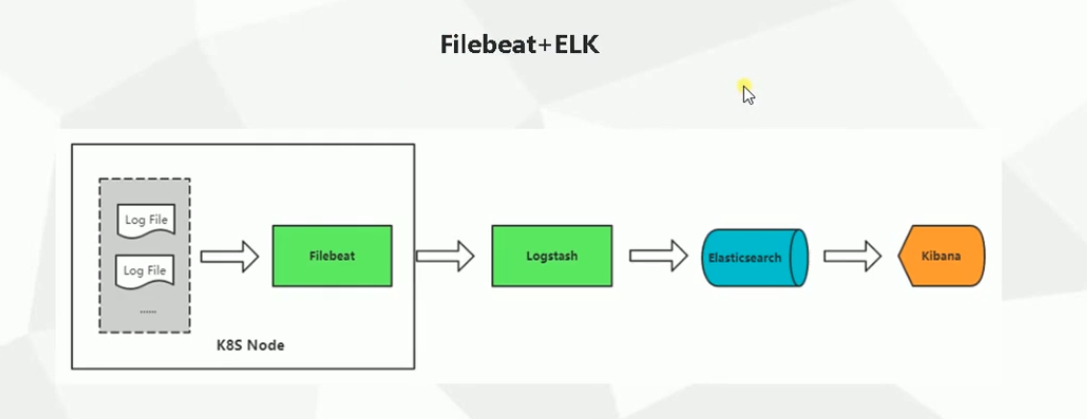

- Mainstream logging solutions

How to collect logs from containers

Quick ELK installation

JDK

`# [root@k8s-node3 yum.repos.d]# yum -y remove java-1.8.0-openjdk`

Install ELK 6.8 with yum

`# JDK 11`

`# Install ELK`
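The install step presumably relies on a yum repository that serves the 6.x packages. A minimal sketch, assuming Elastic's public 6.x repository is used; the file name `elasticsearch.repo` and writing it to the current directory first are illustrative choices, not taken from the original.

```shell
set -e

# Assumption: the node can reach Elastic's public 6.x yum repository.
# The file is written to the current directory so it can be reviewed
# before it is copied into /etc/yum.repos.d/.
cat > elasticsearch.repo <<'EOF'
[elasticsearch-6.x]
name=Elasticsearch repository for 6.x packages
baseurl=https://artifacts.elastic.co/packages/6.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
EOF

echo "wrote elasticsearch.repo"
```

After copying the file to `/etc/yum.repos.d/`, something like `yum -y install java-11-openjdk` followed by `yum -y install elasticsearch logstash kibana` should pull in JDK 11 and the three ELK packages.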

Configure and start Kibana

`# Edit the configuration file`
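The original does not show which settings were changed. As a rough sketch, these are the settings most often adjusted for a single-node setup; the values and the file name `kibana.yml.sketch` are assumptions for illustration.

```shell
set -e

# Assumption: Kibana and Elasticsearch run on the same node with default ports.
# server.host "0.0.0.0" exposes Kibana on all interfaces; narrow it if needed.
cat > kibana.yml.sketch <<'EOF'
server.port: 5601
server.host: "0.0.0.0"
elasticsearch.hosts: ["http://localhost:9200"]
EOF

echo "wrote kibana.yml.sketch"
```

With the RPM package these lines would be merged into `/etc/kibana/kibana.yml`, after which `systemctl start kibana` brings the service up.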

`# Start Kibana`

Configure and start Elasticsearch

`# Local deployment: defaults kept, no tuning applied`

`# Start`

`# Check the service status`

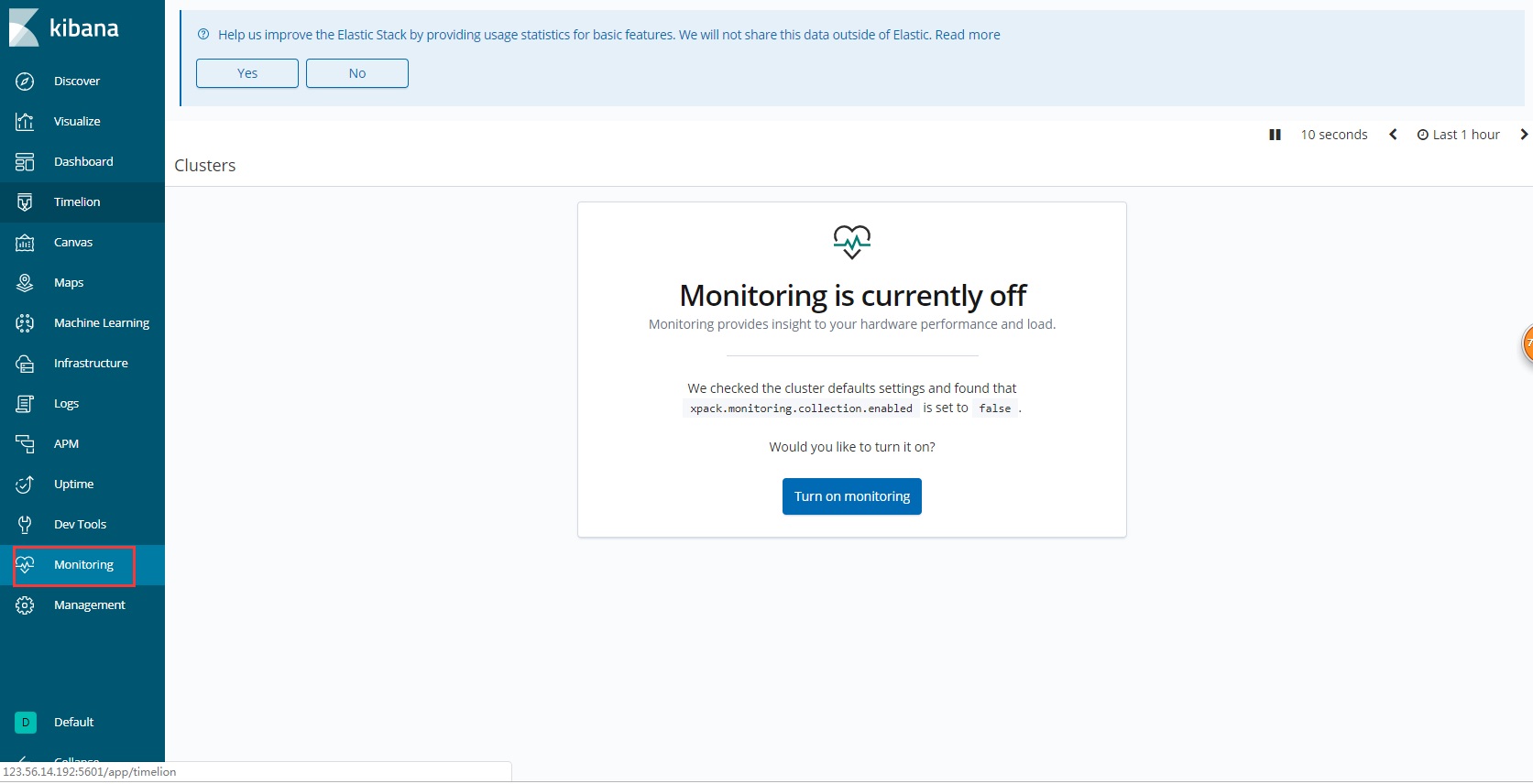

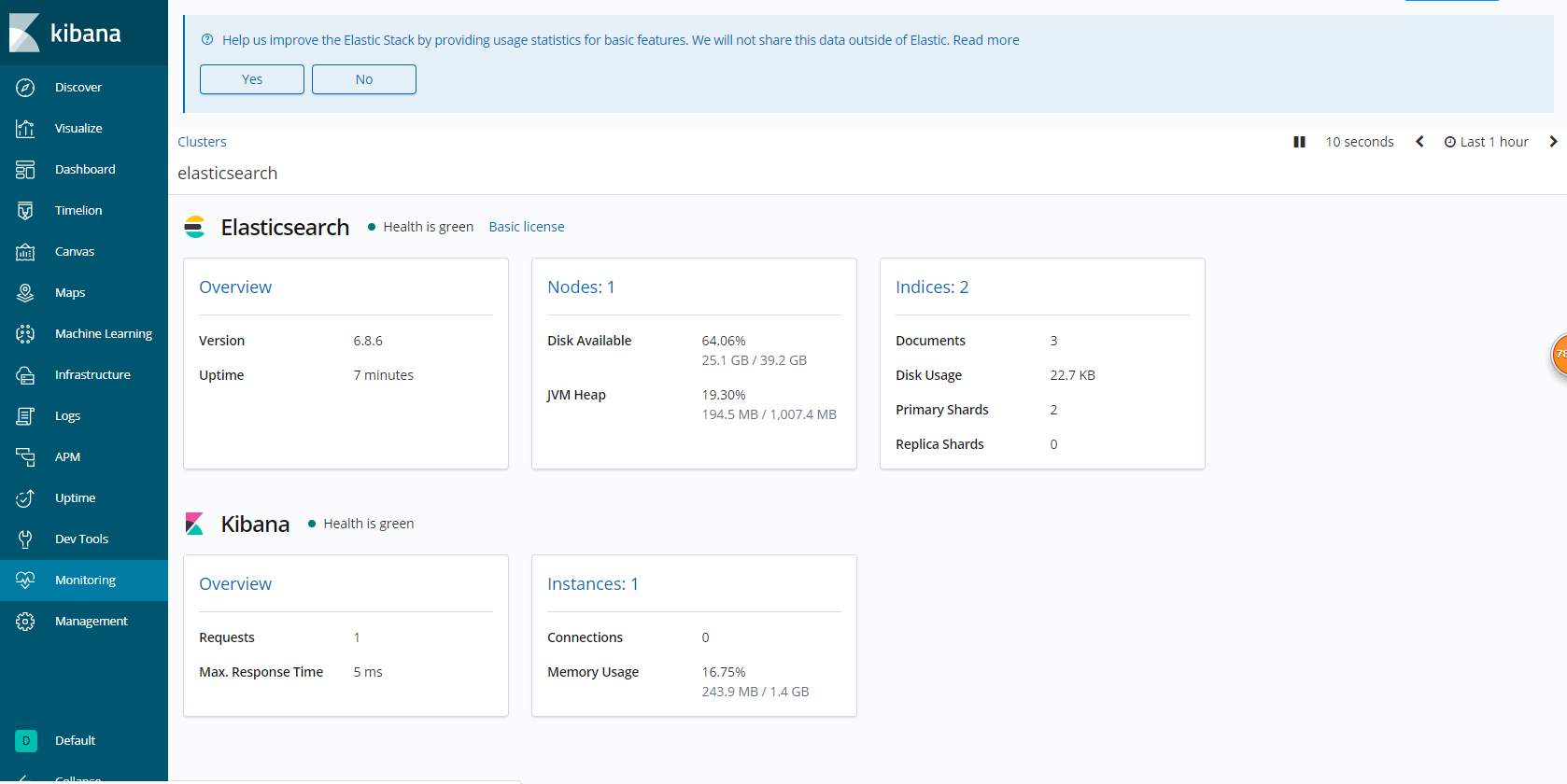

`# Click Monitoring to view Elasticsearch`

Configure Logstash

`# Start Logstash locally: listen for Filebeat, output to Elasticsearch and the console`
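A pipeline that listens for Filebeat and writes to both Elasticsearch and the console could look like the sketch below. The port 5044, the local Elasticsearch address, and the daily index name are assumptions; only the file name `logstash-to-es.conf` and the `k8s-log-` index prefix appear elsewhere in this post.

```shell
set -e

# Quoted heredoc so the shell leaves %{+YYYY.MM.dd} untouched.
cat > logstash-to-es.conf <<'EOF'
input {
  # Filebeat ships events to this port (5044 is the conventional Beats port).
  beats {
    port => 5044
  }
}

output {
  # Assumption: Elasticsearch runs on the same host with default settings.
  elasticsearch {
    hosts => ["http://127.0.0.1:9200"]
    index => "k8s-log-%{+YYYY.MM.dd}"
  }
  # Echo every event to the console for debugging.
  stdout {
    codec => rubydebug
  }
}
EOF

echo "wrote logstash-to-es.conf"
```

Once the file is placed under `/etc/logstash/conf.d/`, it can be run in the foreground with `/usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/logstash-to-es.conf`.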

Configure Filebeat

`# Image registry: https://www.docker.elastic.co/#`

`[root@k8s-master1 ELK-Logs]# cat k8s-logs.yaml`

`# A DaemonSet creates a pod on every node to collect that node's Kubernetes component logs`
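The contents of `k8s-logs.yaml` are not shown, so the following is only a sketch of what such a DaemonSet might contain. It assumes the component logs land in `/var/log/messages` on each node; the ConfigMap name, labels, `kube-system` namespace, image tag `6.8.0`, and the `LOGSTASH_HOST` default are all hypothetical.

```shell
set -e

# Hypothetical Logstash address; override with LOGSTASH_HOST=<ip> when running.
LOGSTASH_HOST="${LOGSTASH_HOST:-192.0.2.10}"

cat > k8s-logs.yaml <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: k8s-logs-filebeat-config
  namespace: kube-system
data:
  filebeat.yml: |-
    filebeat.inputs:
      - type: log
        paths:
          - /messages
        fields:
          app: k8s
          type: module
        fields_under_root: true
    output.logstash:
      hosts: ["${LOGSTASH_HOST}:5044"]
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: k8s-logs
  namespace: kube-system
spec:
  selector:
    matchLabels:
      project: k8s
      app: filebeat
  template:
    metadata:
      labels:
        project: k8s
        app: filebeat
    spec:
      containers:
        - name: filebeat
          image: docker.elastic.co/beats/filebeat:6.8.0
          args: ["-c", "/etc/filebeat.yml", "-e"]
          # Assumption: root is needed to read the host's /var/log/messages.
          securityContext:
            runAsUser: 0
          volumeMounts:
            - name: filebeat-config
              mountPath: /etc/filebeat.yml
              subPath: filebeat.yml
            - name: k8s-logs
              mountPath: /messages
      volumes:
        - name: k8s-logs
          hostPath:
            path: /var/log/messages
            type: File
        - name: filebeat-config
          configMap:
            name: k8s-logs-filebeat-config
EOF

echo "wrote k8s-logs.yaml"
```

Applying it with `kubectl apply -f k8s-logs.yaml` should schedule one Filebeat pod per node, each reading only its own node's log file through the `hostPath` mount.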

`# Check the pod's logs`

Collect Nginx logs

Deploy the PHP demo

`[root@k8s-master1 php-demo]# kubectl apply -f namespace.yaml`

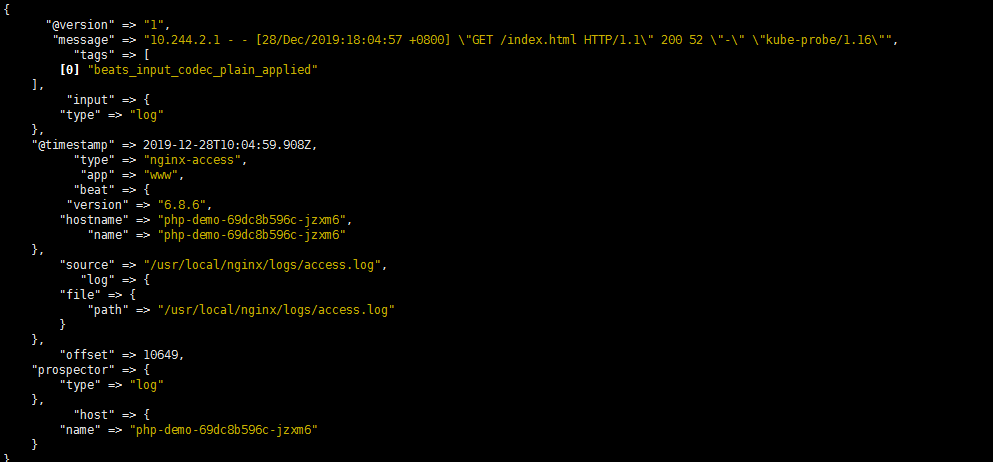

Modify the PHP deployment

`# Add a Filebeat container to the pod to collect logs`
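The sidecar pattern described here can be sketched as a Deployment in which the application container and a Filebeat container share an `emptyDir` volume holding the Nginx logs. Everything below is illustrative: the output file name, the `test` namespace, the `php-demo` labels, the application image, the log directory `/usr/local/nginx/logs`, and the ConfigMap name `filebeat-nginx-config` are assumptions, not values from the original.

```shell
set -e

# Hypothetical application image; override with APP_IMAGE=<image> when running.
APP_IMAGE="${APP_IMAGE:-registry.example.com/php-demo:latest}"

cat > php-deployment-sidecar.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: php-demo
  namespace: test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: php-demo
  template:
    metadata:
      labels:
        app: php-demo
    spec:
      containers:
        # Application container: writes Nginx logs into the shared volume.
        - name: nginx
          image: ${APP_IMAGE}
          volumeMounts:
            - name: nginx-logs
              mountPath: /usr/local/nginx/logs
        # Sidecar: reads the same directory and ships the logs.
        - name: filebeat
          image: docker.elastic.co/beats/filebeat:6.8.0
          args: ["-c", "/etc/filebeat.yml", "-e"]
          volumeMounts:
            - name: filebeat-config
              mountPath: /etc/filebeat.yml
              subPath: filebeat.yml
            - name: nginx-logs
              mountPath: /usr/local/nginx/logs
      volumes:
        - name: nginx-logs
          emptyDir: {}
        - name: filebeat-config
          configMap:
            name: filebeat-nginx-config
EOF

echo "wrote php-deployment-sidecar.yaml"
```

The shared `emptyDir` is the key design choice: the application needs no changes, and Filebeat sees the log files without access to the node's filesystem. After `kubectl apply -f php-deployment-sidecar.yaml`, the pod should report two containers in its READY column.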

`# Configuration file`
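The Filebeat configuration for the Nginx pod is delivered as a ConfigMap. A minimal sketch follows, assuming the access log sits at `/usr/local/nginx/logs/access.log`; the ConfigMap name, the `test` namespace, the `app` and `type` field values, and the `LOGSTASH_HOST` default are hypothetical. Only the file name `filebeat-nginx-configmap.yaml` comes from this post.

```shell
set -e

# Hypothetical Logstash address; override with LOGSTASH_HOST=<ip> when running.
LOGSTASH_HOST="${LOGSTASH_HOST:-192.0.2.10}"

cat > filebeat-nginx-configmap.yaml <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-nginx-config
  namespace: test
data:
  filebeat.yml: |-
    filebeat.inputs:
      - type: log
        paths:
          - /usr/local/nginx/logs/access.log
        # Custom fields let Logstash tell log sources apart.
        fields:
          app: www
          type: nginx-access
        fields_under_root: true
    output.logstash:
      hosts: ["${LOGSTASH_HOST}:5044"]
EOF

echo "wrote filebeat-nginx-configmap.yaml"
```

Setting `fields_under_root: true` places `app` and `type` at the top level of each event rather than under a `fields` key, which keeps any Logstash conditionals that test them short.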

Apply the ConfigMap

`[root@k8s-master1 ELK-Logs]# kubectl apply -f filebeat-nginx-configmap.yaml`

Rolling-update the deployment

`# The pod now has two containers`

Inspect the Filebeat container

`# When a pod has multiple containers, select one with -c (-n selects the namespace)`

Modify the Filebeat configuration file

`# With the previous configuration, every log ended up in the k8s-log-<date> index regardless of its type`
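One way to stop everything landing in a single index is to have Filebeat tag each input with custom fields and let Logstash route on them. The sketch below shows the Logstash side only, and it assumes each Filebeat input sets a top-level `type` field (via `fields` plus `fields_under_root: true`); the field values and the `nginx-access-` and `tomcat-catalina-` index names are hypothetical.

```shell
set -e

# Quoted heredoc so the shell leaves %{+YYYY.MM.dd} untouched.
cat > logstash-to-es.conf <<'EOF'
input {
  beats {
    port => 5044
  }
}

output {
  # Route each event by the "type" field that Filebeat attached to it.
  if [type] == "nginx-access" {
    elasticsearch {
      hosts => ["http://127.0.0.1:9200"]
      index => "nginx-access-%{+YYYY.MM.dd}"
    }
  } else if [type] == "tomcat-catalina" {
    elasticsearch {
      hosts => ["http://127.0.0.1:9200"]
      index => "tomcat-catalina-%{+YYYY.MM.dd}"
    }
  } else {
    # Anything untagged keeps going to the original catch-all index.
    elasticsearch {
      hosts => ["http://127.0.0.1:9200"]
      index => "k8s-log-%{+YYYY.MM.dd}"
    }
  }
  stdout {
    codec => rubydebug
  }
}
EOF

echo "wrote logstash-to-es.conf"
```

Keeping a final `else` branch means logs from sources that have not been tagged yet are still indexed instead of being dropped.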

`[root@k8s-node3 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/logstash-to-es.conf`

`# The Filebeat command line should also produce normal output`

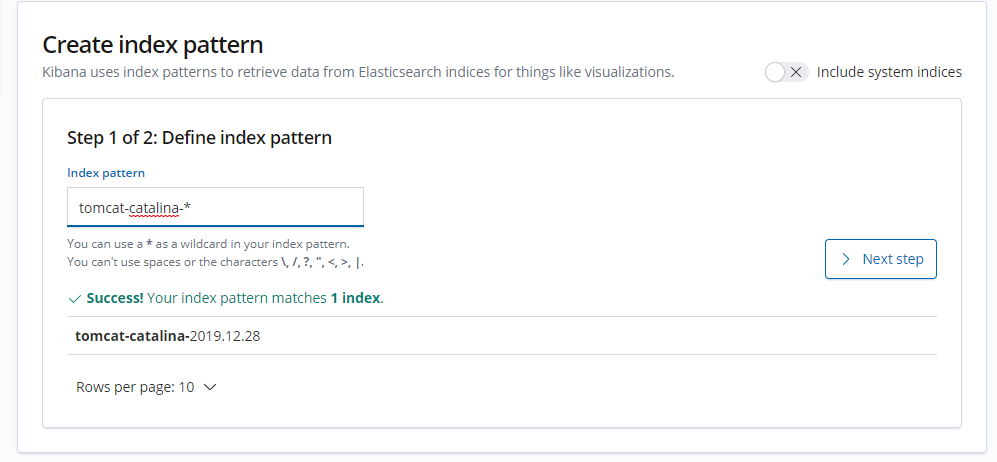

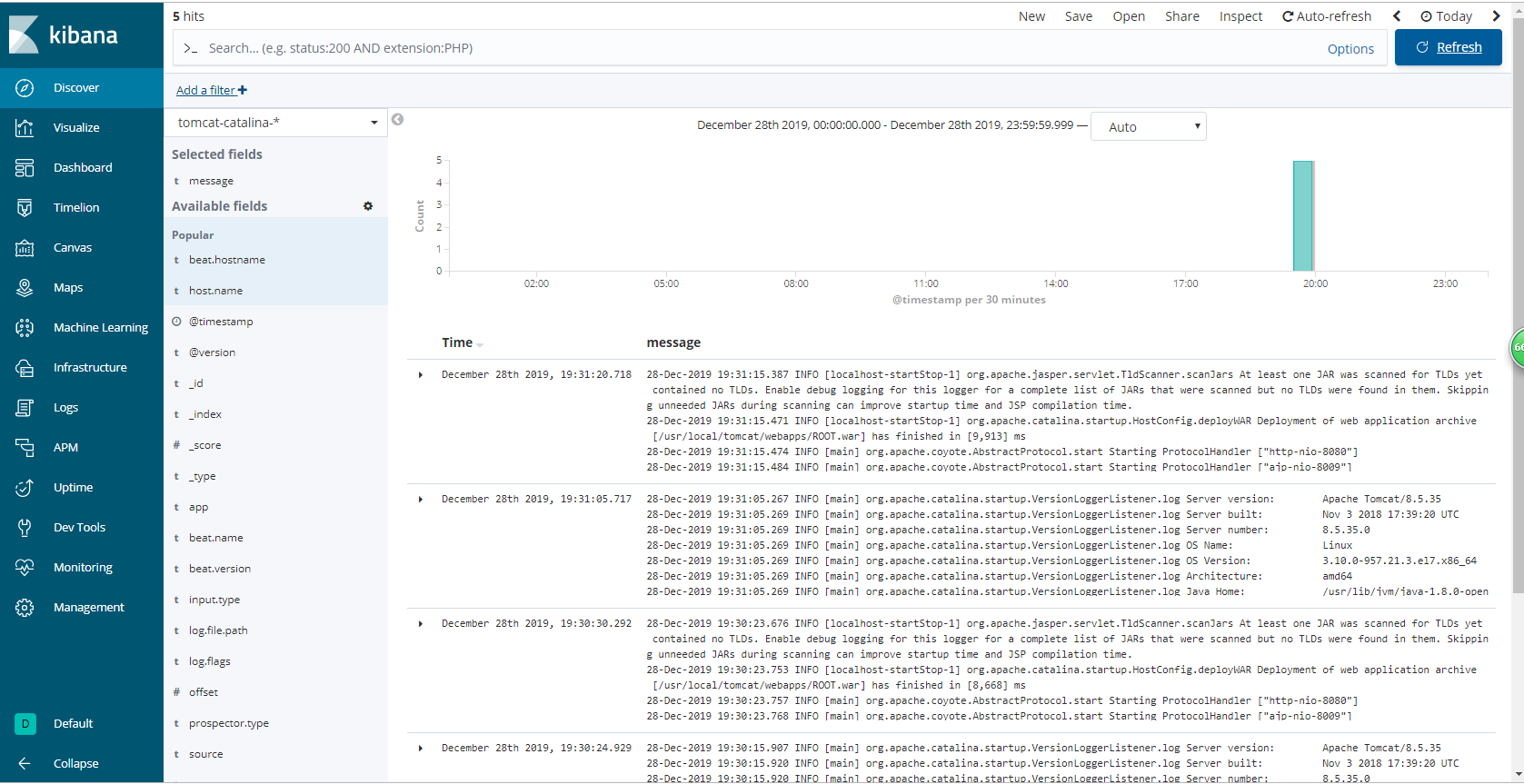

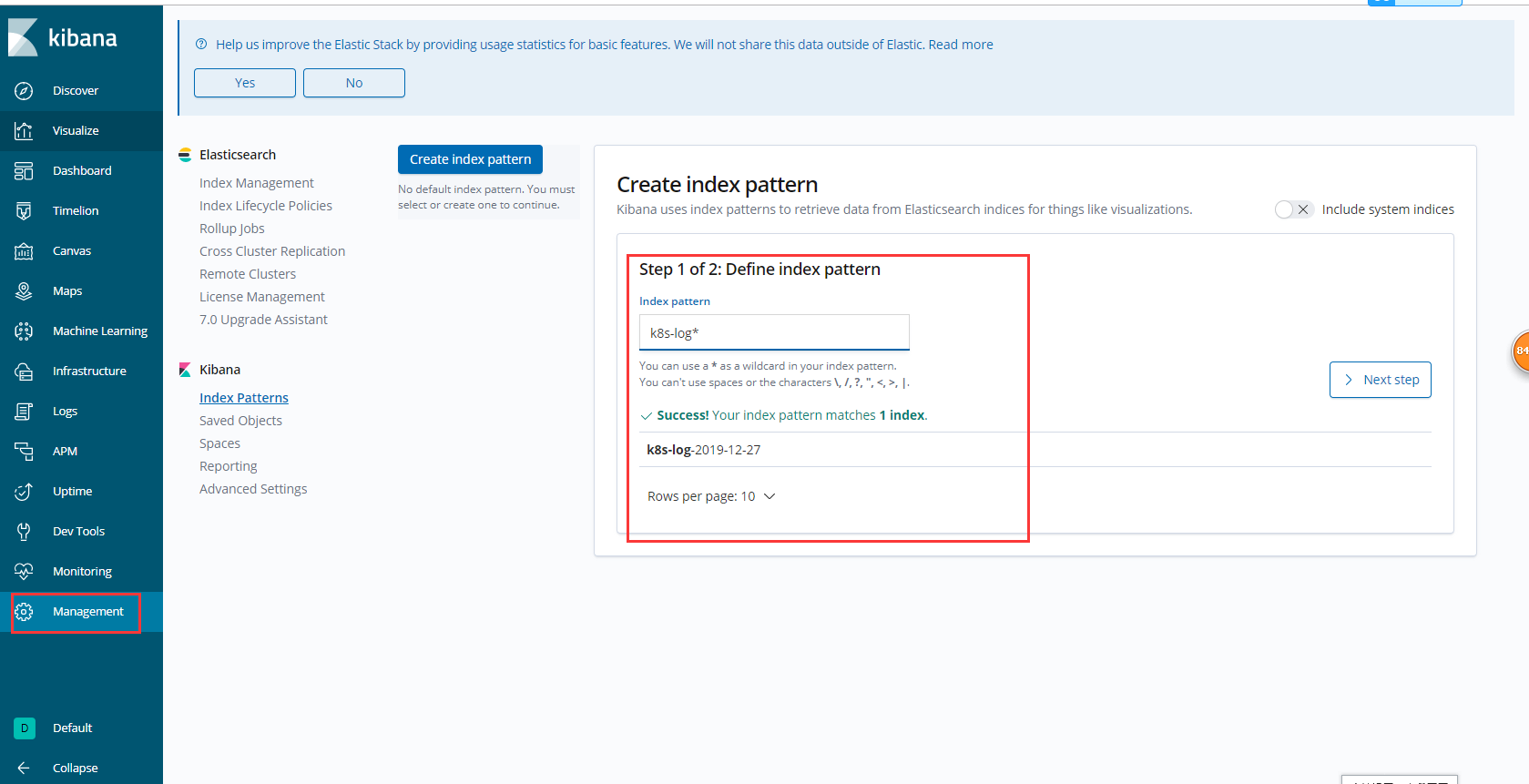

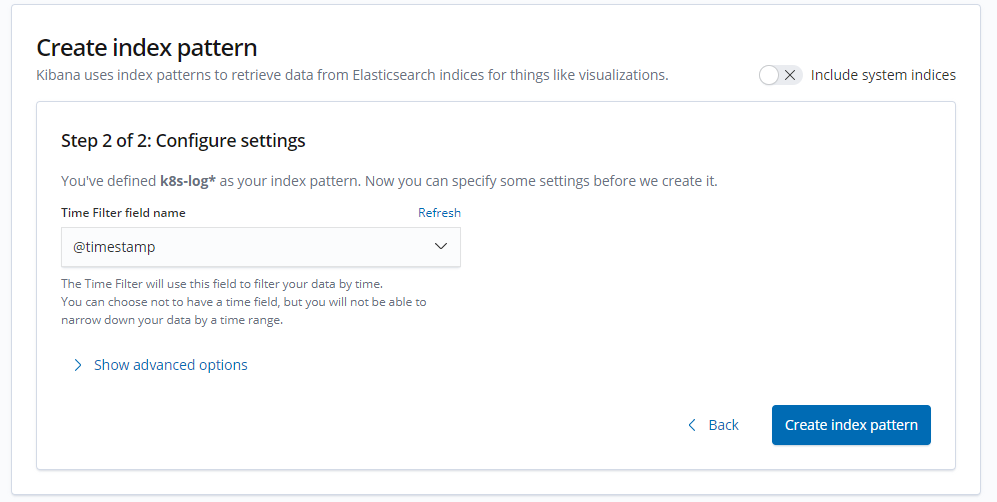

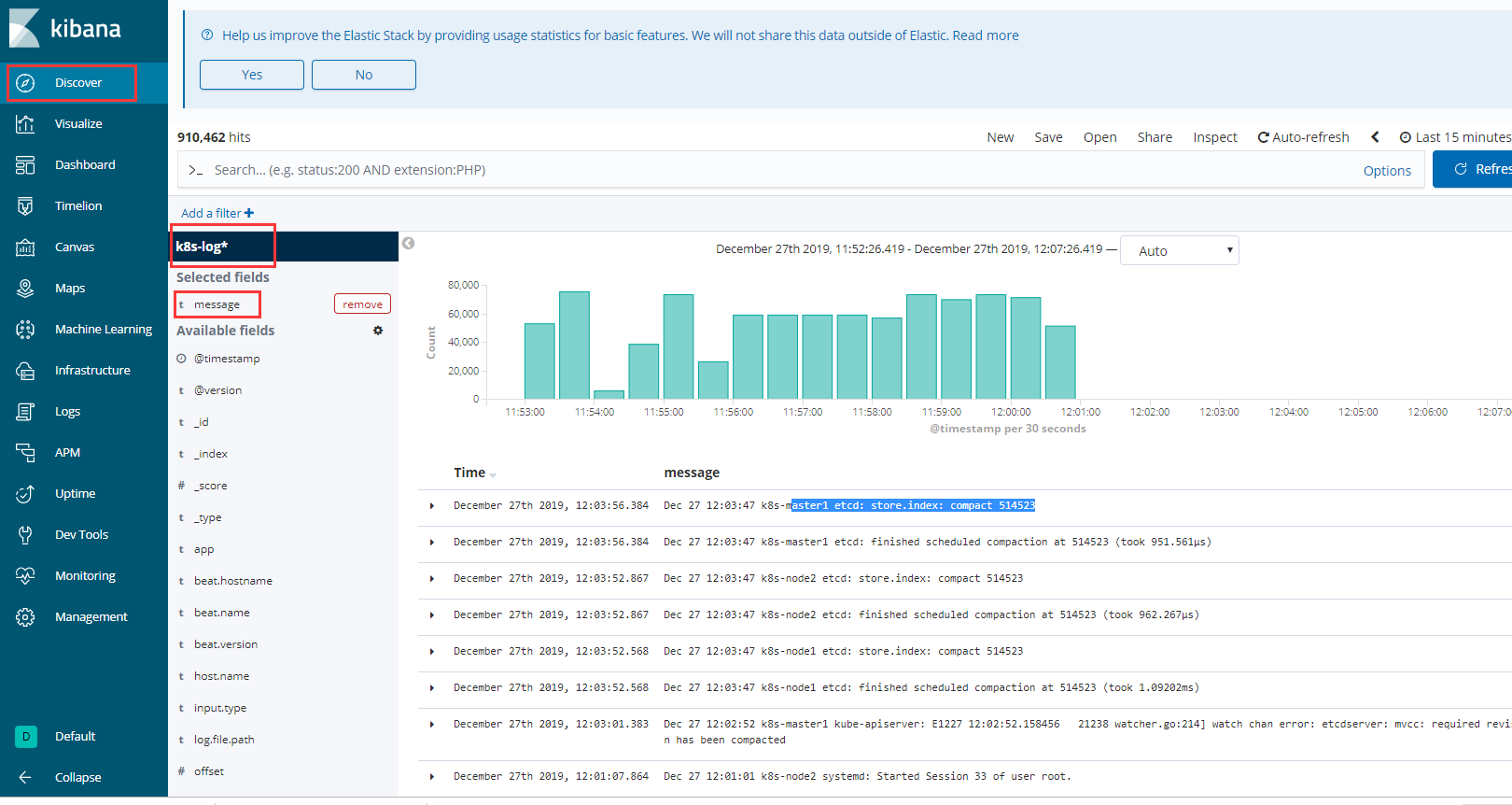

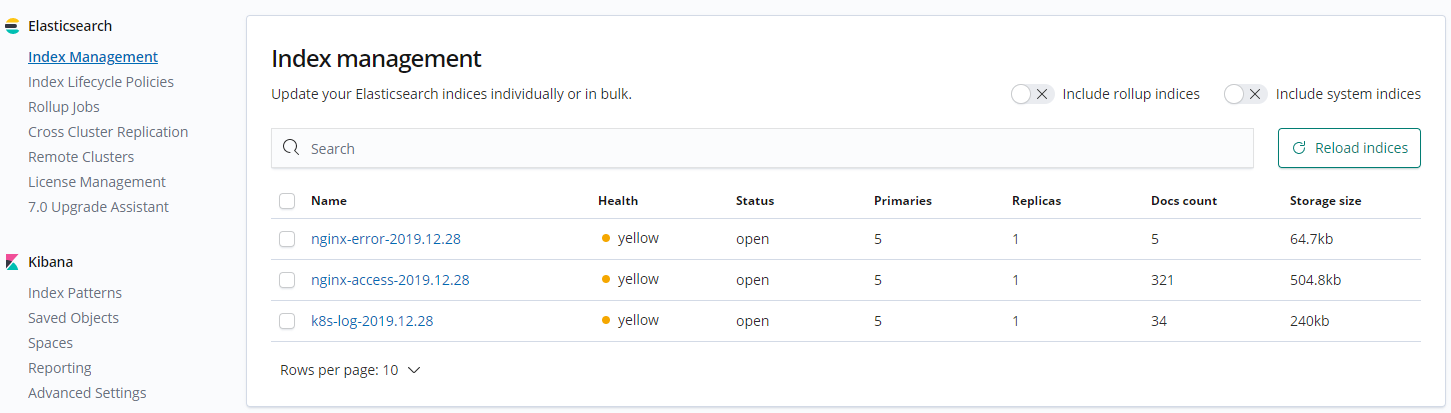

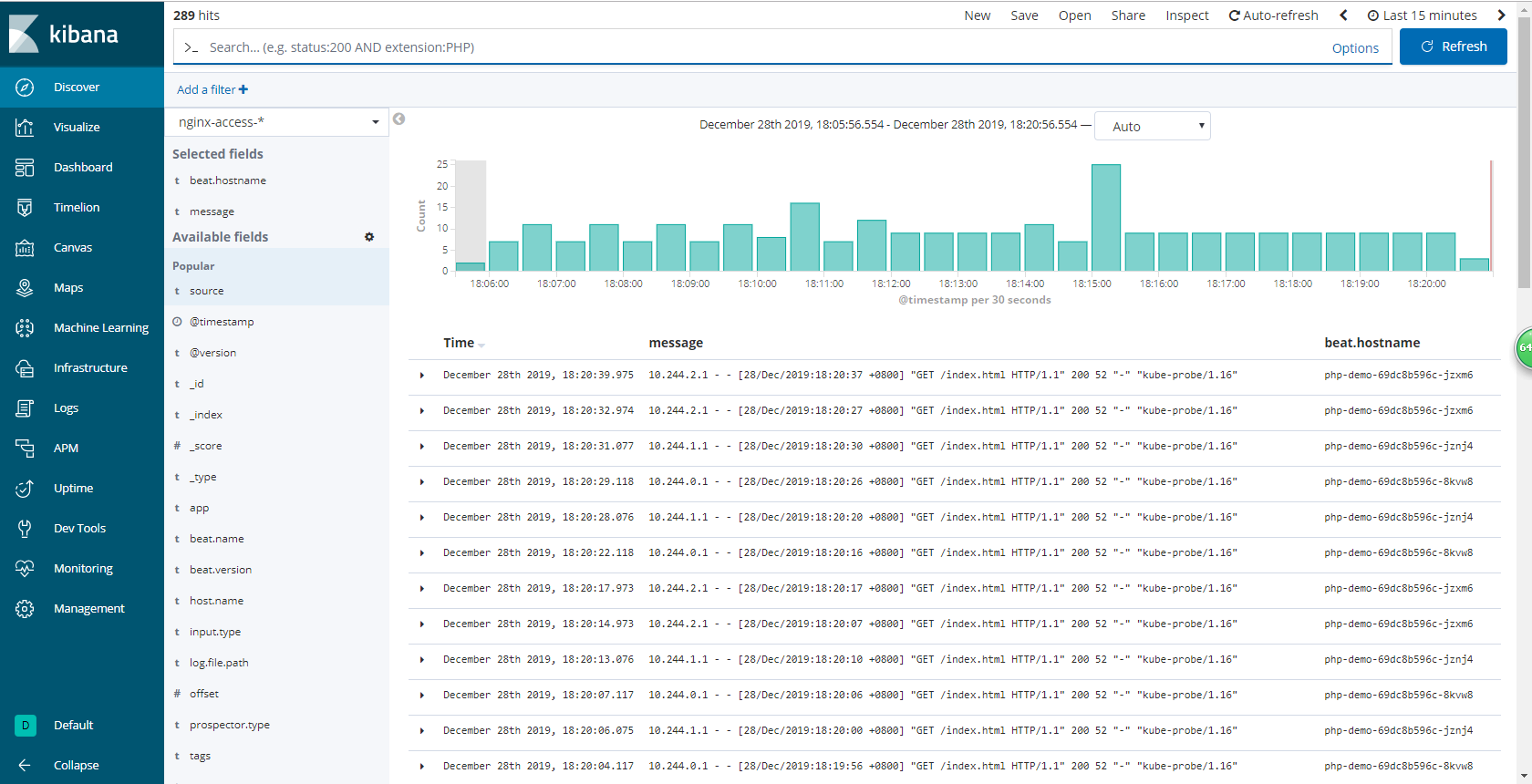

Kibana configuration

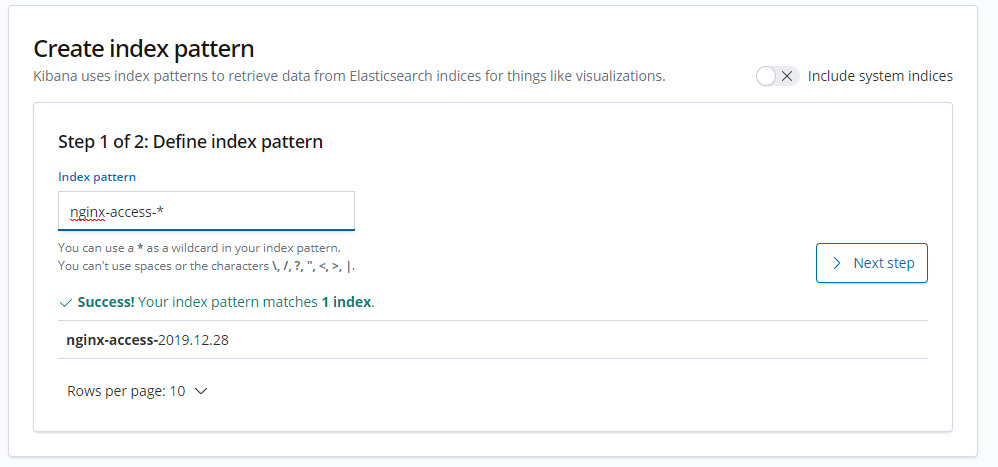

`# Open Index Management`

`# Configure the index pattern`

`# Refresh the page and view the data`

Collect Tomcat logs

Start the Tomcat project

`# Data storage: reuse the same MySQL instance`

Update the deployment

`[root@k8s-master1 ELK-Logs]# cat tomcat-deployment.yaml`

Filebeat configuration file

`[root@k8s-master1 ELK-Logs]# cat filebeat-tomcat-configmap.yaml`
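The contents of `filebeat-tomcat-configmap.yaml` are not shown. Tomcat logs differ from Nginx access logs mainly in that Java stack traces span several lines, so a sketch of this ConfigMap would likely add Filebeat's multiline settings. The log path, the timestamp pattern (which assumes Tomcat 8+ default lines starting like `08-Jan-2020`), the ConfigMap name, the namespace, the field values, and the `LOGSTASH_HOST` default are all assumptions.

```shell
set -e

# Hypothetical Logstash address; override with LOGSTASH_HOST=<ip> when running.
LOGSTASH_HOST="${LOGSTASH_HOST:-192.0.2.10}"

cat > filebeat-tomcat-configmap.yaml <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-tomcat-config
  namespace: test
data:
  filebeat.yml: |-
    filebeat.inputs:
      - type: log
        paths:
          - /usr/local/tomcat/logs/catalina.*
        fields:
          app: www
          type: tomcat-catalina
        fields_under_root: true
        # Lines that do NOT start with a date are appended to the
        # previous line, so a stack trace becomes a single event.
        multiline:
          pattern: '^[0-9]{2}-[A-Za-z]{3}-[0-9]{4}'
          negate: true
          match: after
    output.logstash:
      hosts: ["${LOGSTASH_HOST}:5044"]
EOF

echo "wrote filebeat-tomcat-configmap.yaml"
```

If the Tomcat build in use writes a different timestamp format, only `multiline.pattern` needs to change; `negate: true` with `match: after` stays the same.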

Apply the update

`[root@k8s-master1 ELK-Logs]# kubectl apply -f filebeat-tomcat-configmap.yaml`

Create the index and view the logs

1. Deploying a JAR package works on the same principle: deploy Filebeat alongside it.